#1 in a series of articles about wander and jitter

When many people (including me…) explain how digital audio “works”, they often use the analogy of film. Film doesn’t capture any movement – it’s a series of still photographs that are taken at a given rate (24 frames, or photos, per second, for example). If the frame rate is fast enough, then you think that things are moving on the screen. However, if you were a fly (whose brain runs faster than yours – which is why you rarely catch one…), you would not see movement, you’d see a slow slide show.

In order for the film to look natural (at least for slowly-moving objects), you have to play it back at the same frame rate that was used to do the recording in the first place. So, if your movie camera ran at 24 fps (frames per second), then your projector should also run at 24 fps…

Digital audio isn’t exactly the same as film… Yes, when you make a digital recording, you grab “snapshots” (called samples) of the signal level at a regular rate (called the sampling rate). However, there is at least one significant difference from film. In film, if something happened between frames (say, a lightning flash), then there will be no photo of it – and therefore, as far as the movie is concerned, it never happened. In digital audio, the signal is low-pass filtered before it’s sampled, so a signal that happens between samples is “smeared” in time, and its effect appears in a number of samples around the event. (That effect will not be part of this discussion…)

Back to the film – the theory is that your projector runs at the same frame rate as your movie camera – if not, people appear to be moving in slow motion or bouncing around too quickly. However, what happens when the frame rate varies just a little? For example, what if the camera ran at exactly 24 fps, but the projector was somewhat imprecise: on average it also ran at 24 fps, but the time between successive frames varied randomly from 1/25th to 1/23rd of a second? Chances are that you would not notice this unless the variation were extreme.

However, if the camera was the one with the slightly-varying frame rate, then you would not (for example) be able to use the film to accurately measure the speeds of passing cars because the time between photos would not be 1/24th of a second – it would be approximately 1/24th of a second with some error… The larger the error in the time between photos, the bigger the error in our measurement of how far the car travelled in 1/24th of a second.

If you have a turntable, you know that it is supposed to rotate at exactly 33 and 1/3 revolutions per minute. However, it doesn’t. It slowly speeds up and slows down a little, resulting in a measurable effect called “wow”. It also varies in speed quickly, resulting in a similar measurable effect called “flutter”. This is the same as our slightly varying frame rate in the film projector or camera – it’s a varying distortion in time, either in the playback or in the recording itself.

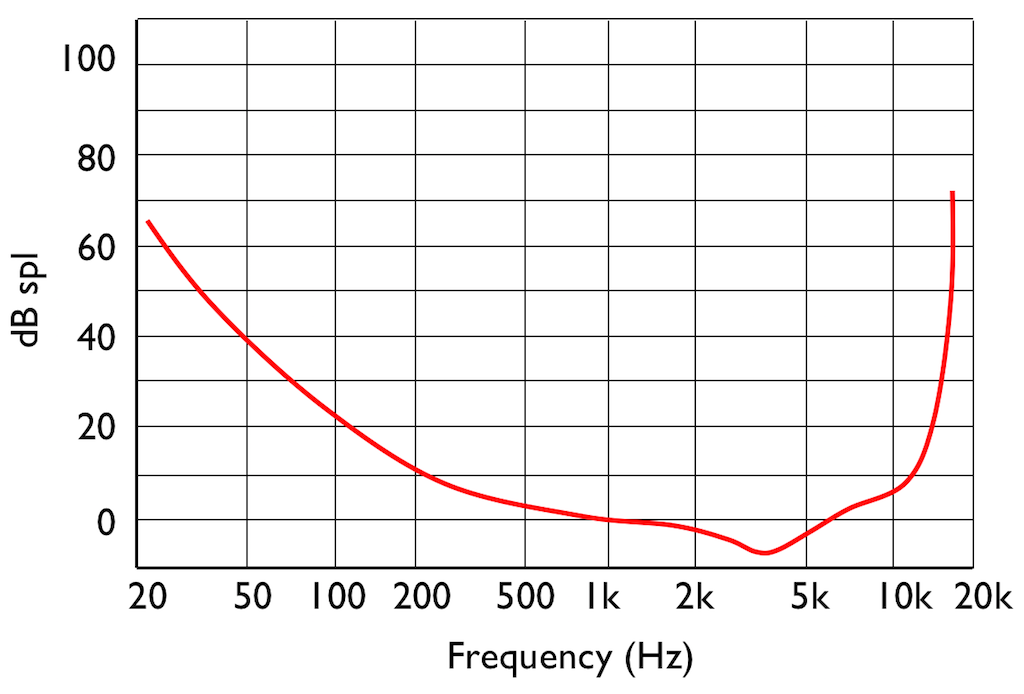

Digital audio has exactly the same problem. In theory, the sampling rate is constant, meaning that the time between successive samples is always the same. A compact disc is played with a sampling rate of 44,100 samples per second (or 44.1 kHz), so, in theory, each sample comes 1/44,100th of a second after the previous one. However, in practice, this interval varies slightly over time. If it changes slowly, then we call the error “wander”, and if it changes quickly, we call it “jitter”.
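To put some numbers on this, here is a minimal Python sketch that computes the nominal CD sample period and then perturbs each sample’s timing with a sinusoidal error. The sinusoidal error model, the 1 ns amplitude and the 1 Hz and 5 kHz rates are hypothetical choices, made only to contrast a slowly changing timing error with a quickly changing one.

```python
import math

FS = 44100.0            # CD sampling rate, in samples per second
T_NOMINAL = 1.0 / FS    # nominal time between samples, in seconds

def sample_times(n, error_amplitude_s, error_freq_hz):
    """Times of n samples when the clock's timing error is a sinusoid.
    (A deliberately simple, hypothetical error model.)"""
    times = []
    for k in range(n):
        ideal = k * T_NOMINAL
        error = error_amplitude_s * math.sin(2 * math.pi * error_freq_hz * ideal)
        times.append(ideal + error)
    return times

def max_interval_error(times):
    """Largest deviation of any sample-to-sample interval from the nominal period."""
    return max(abs((b - a) - T_NOMINAL) for a, b in zip(times, times[1:]))

# Same 1 ns peak timing error, varying slowly ("wander") or quickly ("jitter").
wander = sample_times(1000, 1e-9, 1.0)
jitter = sample_times(1000, 1e-9, 5000.0)

print(f"nominal sample period: {T_NOMINAL * 1e6:.3f} us")   # 22.676 us
print(f"worst interval error, slow: {max_interval_error(wander):.2e} s")
print(f"worst interval error, fast: {max_interval_error(jitter):.2e} s")
```

Both cases have the same peak timing error, but when the error changes quickly, the interval between neighbouring samples varies far more than when it changes slowly.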

“Wander” is digital audio’s version of “wow” and “jitter” is digital “flutter”.

Transmitting digital audio

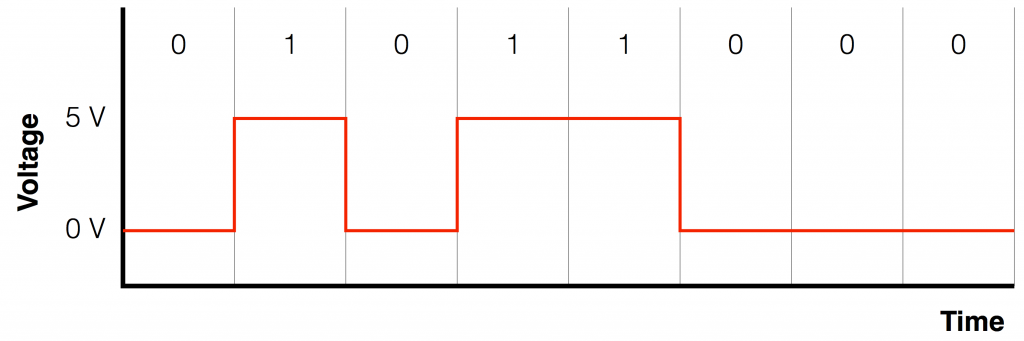

Without getting into any details at all, we can say that “digital audio” means that you have a representation of the audio signal using a stream of “1’s” and “0’s”. Let’s say that you wanted to transmit those 1’s and 0’s from one device to another device using an electrical connection. One way to do this is to use a system called “non-return-to-zero”. You pick two voltages, one high and one low (typically 0 V), and you make the voltage high if the value is a 1 and low if it’s a 0. An example of this is shown in the Figure below.

This protocol is easy to understand and easy to implement, but it has a drawback: the receiving device needs to know when to measure the incoming electrical signal, otherwise it might measure the wrong value. If the incoming signal just alternated between 0 and 1 all the time (01010101010101010), then the receiver could figure out the timing (the “clock”) from the signal itself by looking at the transitions between the low and high voltages. However, if you get a long string of 1’s or 0’s in a row, then the voltage stays high or low, and there are no transitions to give you a hint as to when the values are coming in…
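The non-return-to-zero scheme, and its weakness with long runs, can be sketched in a few lines of Python. The 1.0 and 0.0 voltages and the function names are illustrative assumptions, not taken from any standard.

```python
def nrz_encode(bits, high=1.0, low=0.0):
    """Non-return-to-zero: one voltage level per bit, held for the whole bit period."""
    return [high if b == 1 else low for b in bits]

def count_transitions(levels):
    """How many times the voltage changes from one bit period to the next."""
    return sum(1 for a, b in zip(levels, levels[1:]) if a != b)

alternating = [0, 1] * 8   # 0101...: a transition between every pair of bits
long_run = [1] * 16        # 1111...: the voltage never changes

print(count_transitions(nrz_encode(alternating)))  # 15
print(count_transitions(nrz_encode(long_run)))     # 0
```

With no transitions in the second case, the receiver has nothing in the signal itself to tell it where one bit ends and the next begins.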

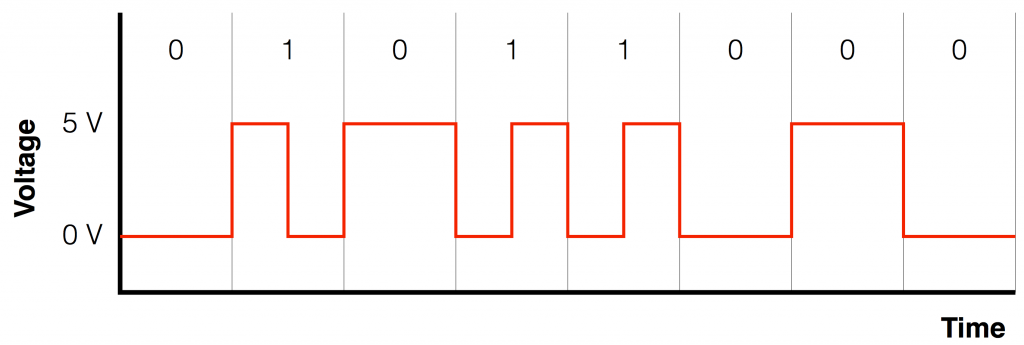

So, we need a simple system that carries not only the information, but also the timing. In other words, we need a protocol that represents a 1 differently from a 0, but that also has a voltage transition at every “bit” (every value). One way to do this is to say that, whenever the signal is a 1, we make an extra voltage transition. This strategy is called a “bi-phase mark”, an example of which is shown below in Figure 2.

Notice in Figure 2 that there is a voltage transition (either from low to high or from high to low) at the boundary between every pair of values (0 or 1), so the receiver can use this to know when to measure the voltage (a characteristic that is known as “self-clocking”, because the clock information is built into the signal itself). A 0 can be either a low voltage or a high voltage, as long as it doesn’t change. A 1 is represented as a quick “high-low” or “low-high”.
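Here is a minimal Python sketch of the bi-phase mark rule as described above, representing each bit as two half-bit levels. It deliberately ignores everything that S-PDIF and AES/EBU add on top (real voltages, framing and so on), and the function names are hypothetical.

```python
def biphase_mark_encode(bits, start_level=0):
    """Return two half-cell levels (0 = low, 1 = high) for every bit."""
    level = start_level
    halves = []
    for b in bits:
        level ^= 1            # always change level at the start of a bit
        halves.append(level)
        if b == 1:
            level ^= 1        # a 1 gets an extra change in the middle of the bit
        halves.append(level)
    return halves

def biphase_mark_decode(halves):
    """A bit is 1 if its two half-cells differ, 0 if they match (polarity is irrelevant)."""
    return [int(halves[i] != halves[i + 1]) for i in range(0, len(halves), 2)]

def has_transition_every_bit(halves):
    """True if the level changes at every boundary between consecutive bits."""
    return all(halves[i - 1] != halves[i] for i in range(2, len(halves), 2))

print(biphase_mark_encode([0, 1, 1, 0]))  # [1, 1, 0, 1, 0, 1, 0, 0]

bits = [1] * 8 + [0] * 8                  # long runs that would defeat NRZ
encoded = biphase_mark_encode(bits)
print(has_transition_every_bit(encoded))     # True
print(biphase_mark_decode(encoded) == bits)  # True
```

Because the decoder only asks whether the two halves of a bit differ, it works regardless of which polarity the encoder happened to start with.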

This is basically the way S-PDIF and AES/EBU work. Their voltage values are slightly different than the ones shown in Figure 2 – and there are many more small details to worry about – but the basic concept of a bi-phase mark is used in both transmission systems.

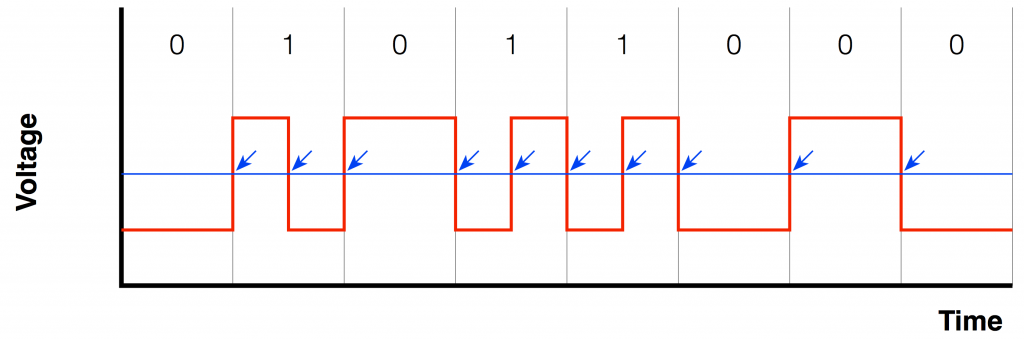

Detecting the clock

Let’s say that you’re given the task of detecting the clock in the signal shown in Figure 2, above. The simplest way to do this is to draw a line that is half-way between the low and the high voltages and detect when the voltage crosses that line, as shown in Figure 3.

The nice thing about this method is that you don’t need to know what the actual voltages are – you just pick a voltage that’s between the high and low values, call that your threshold – and if the signal crosses the threshold (in either direction) you call that an event.
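The threshold detector can be sketched as follows, assuming the waveform is available as evenly spaced voltage samples (a hardware receiver would typically do this with a comparator instead). The linear interpolation between samples and the `midpoint` helper are illustrative choices, not part of the method described above.

```python
def threshold_crossings(samples, dt, threshold):
    """Times at which a sampled waveform crosses `threshold` in either direction.

    `samples` are voltages taken every `dt` seconds. Each crossing time is
    refined by linear interpolation between the two samples on either side.
    """
    times = []
    for i in range(1, len(samples)):
        a, b = samples[i - 1], samples[i]
        if (a < threshold) != (b < threshold):   # the two samples straddle the threshold
            fraction = (threshold - a) / (b - a)
            times.append((i - 1 + fraction) * dt)
    return times

def midpoint(samples):
    """A threshold half-way between the lowest and highest voltages seen."""
    return (min(samples) + max(samples)) / 2

# The same detector works whatever the actual voltages are.
print(threshold_crossings([0, 0, 1, 1, 0, 0], 1.0, 0.5))   # [1.5, 3.5]
signal = [0, 0, 3.3, 3.3, 0, 0]
print(threshold_crossings(signal, 1.0, midpoint(signal)))  # [1.5, 3.5]
```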

Real-world square waves

So far so good. We have a digital audio signal, we can encode it as an electrical signal, we can transmit that signal over a wire to a device that can derive the clock (and therefore ultimately, the sampling rate) from the signal itself…

One small comment here: although the audio signal that we’re transmitting has been encoded as a digital representation (1’s and 0’s) the electrical signal that we’re using to transmit it is analogue. It’s just a change in voltage over time. In essence, the signal shown in Figure 2 is an analogue square wave with a frequency that is modulating between two values.
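As a worked example of those two values: in a bi-phase mark, a long run of 0’s changes level once per bit (one full cycle every two bits), while a long run of 1’s changes level twice per bit (one full cycle per bit). The sketch below assumes an S-PDIF-style stream at 44.1 kHz carrying 64 bits per sample period (two 32-bit subframes); that framing detail is an assumption added here, not something covered in this article.

```python
def biphase_mark_fundamentals(bit_rate_hz):
    """Fundamental frequencies of a bi-phase mark signal for long runs of each bit value."""
    zeros = bit_rate_hz / 2   # one level change per bit: a full cycle every two bits
    ones = bit_rate_hz        # two level changes per bit: a full cycle every bit
    return zeros, ones

# Assumed example: 44.1 kHz sampling, 2 subframes x 32 bits per sample period.
bit_rate = 44100 * 2 * 32     # 2,822,400 bits per second
f_zeros, f_ones = biphase_mark_fundamentals(bit_rate)
print(f"{f_zeros / 1e6:.4f} MHz to {f_ones / 1e6:.4f} MHz")  # 1.4112 MHz to 2.8224 MHz
```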

Now let’s start getting real. In order to create a square wave, you need to be able to transition from one voltage to another voltage instantaneously (this is very fast). This means that, in order to be truly square, the vertical lines in all of the above figures must be really vertical, and the corners must be 90°. In order for both of these things to be true, the circuitry that is creating the voltage signal must have an infinite bandwidth. In other words, it must have the ability to deliver a signal that extends from 0 Hz (or DC) up to ∞ Hz. If it doesn’t, then the square wave won’t really be square.
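The need for infinite bandwidth comes from the fact that a square wave is a sum of infinitely many odd harmonics: for a ±1 square wave with fundamental f0, x(t) = (4/π) Σ sin(2π·k·f0·t)/k over odd k. The sketch below keeps only the harmonics up to a chosen limit (an idealised “brick-wall” band limit, assumed here for simplicity) and evaluates the result shortly after a rising edge. The 1 kHz frequency and the harmonic limits are hypothetical.

```python
import math

def bandlimited_square(t, f0, f_max):
    """A ±1 square wave of fundamental f0 (rising edge at t = 0), rebuilt
    from only those odd harmonics whose frequency is at most f_max."""
    total = 0.0
    k = 1
    while k * f0 <= f_max:
        total += math.sin(2 * math.pi * k * f0 * t) / k
        k += 2                      # a square wave has odd harmonics only
    return 4 / math.pi * total

f0 = 1000.0                         # hypothetical 1 kHz square wave
t = 1 / (100 * f0)                  # 1% of a period after the rising edge
for f_max in (1000.0, 9000.0, 99000.0):
    print(f"harmonics up to {f_max / 1000:.0f} kHz: {bandlimited_square(t, f0, f_max):.3f}")
```

An ideal square wave would already be at +1 at that instant; the fewer harmonics the system passes, the more slowly the edge rises, so it is no longer vertical.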

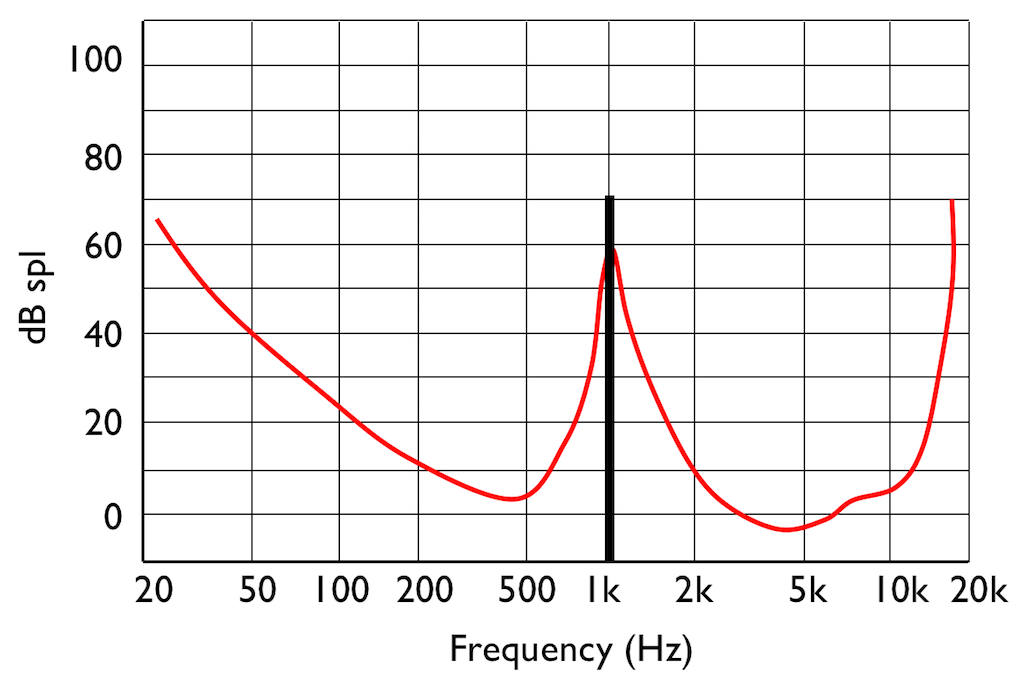

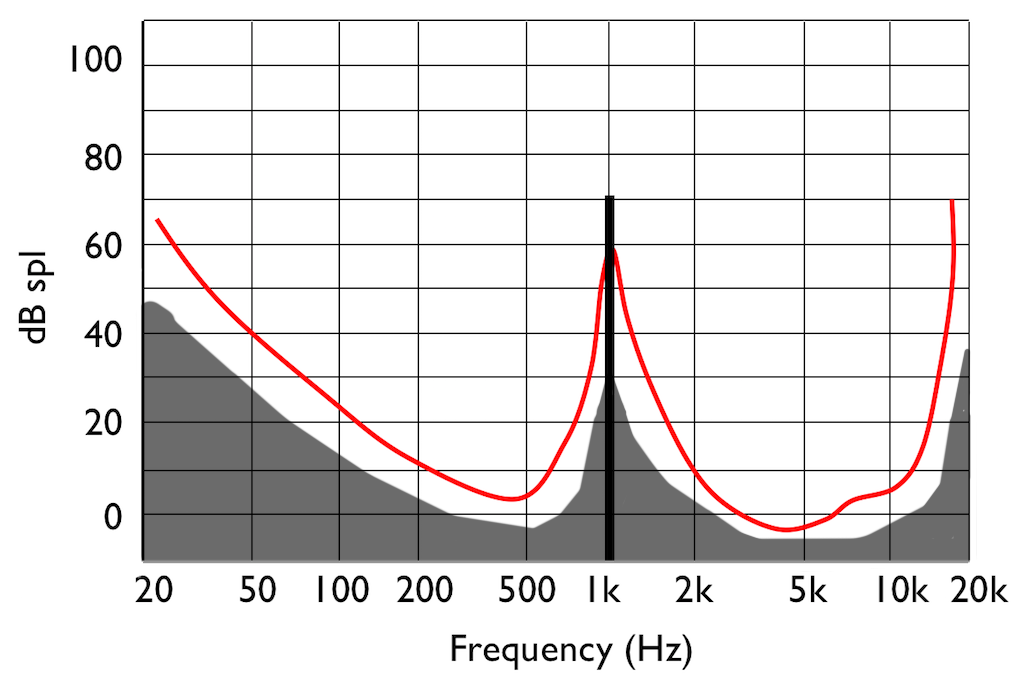

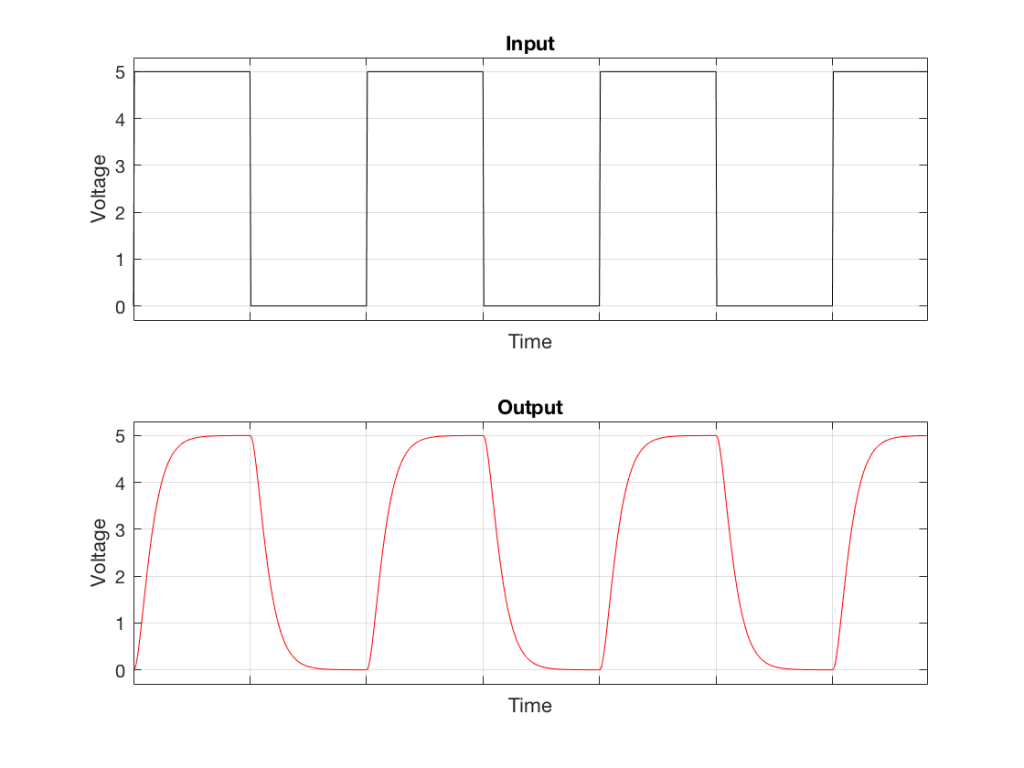

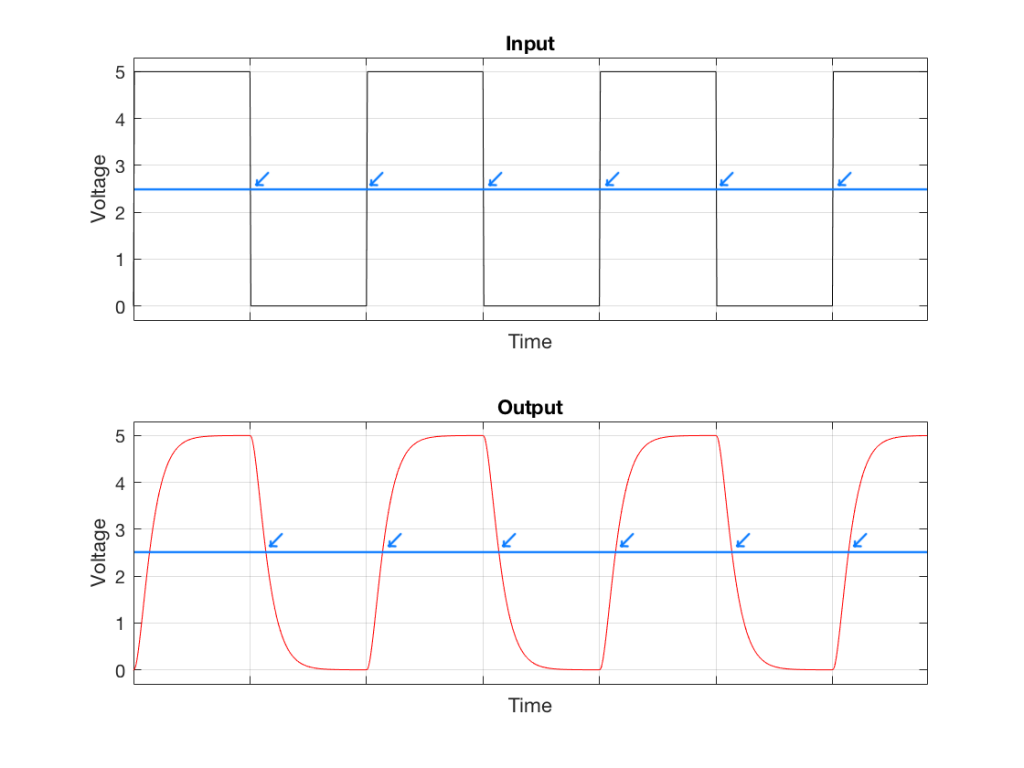

What happens if we try to transmit a square wave through a system that doesn’t extend all the way to ∞ Hz? In other words, what happens if we filter the high frequencies out of a square wave, and what does it look like? Figure 4, below, shows an example of what we can expect in this case.

Note that Figure 4 is just an example… The exact shape of the output (the red curve) will be dependent on the relationship between the fundamental frequency of the square wave and the cutoff frequency of the low-pass filter – or, more accurately, the frequency response of the system.
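To see how the output depends on that relationship, here is a sketch that assumes the system is a simple first-order (RC) low-pass filter with cutoff fc, which may not be the filter used to draw Figure 4. Under that assumption, a 0-to-1 square wave with fundamental f0 settles into a steady state whose peak is 1 / (1 + exp(−π·fc/f0)); the code checks that closed form against a direct simulation.

```python
import math

def rc_square_wave_peak(fc_over_f0, periods=50, steps_per_half=200):
    """Simulate a 0-to-1 square wave through a first-order low-pass filter and
    return the highest output level during the final (steady-state) period."""
    # Time is measured in half-periods of the square wave, so the half-period
    # is 1 and f0 = 1/2; tau = 1/(2*pi*fc) then works out to 1/(pi*fc/f0).
    tau = 1.0 / (math.pi * fc_over_f0)
    dt = 1.0 / steps_per_half
    alpha = 1.0 - math.exp(-dt / tau)       # exact RC update for a held input
    y, peak = 0.0, 0.0
    for p in range(periods):
        for level in (1.0, 0.0):            # high half, then low half
            for _ in range(steps_per_half):
                y += alpha * (level - y)
                if p == periods - 1:
                    peak = max(peak, y)
    return peak

def rc_square_wave_peak_formula(fc_over_f0):
    """Closed form of the same steady-state peak: 1 / (1 + exp(-pi * fc / f0))."""
    return 1.0 / (1.0 + math.exp(-math.pi * fc_over_f0))

for ratio in (0.25, 1.0, 4.0):
    print(f"fc/f0 = {ratio}: peak = {rc_square_wave_peak(ratio):.3f}")
```

With the cutoff at a quarter of the fundamental, the output only reaches about 0.69 of the full swing (and by symmetry bottoms out at about 0.31); with the cutoff at four times the fundamental, it gets essentially all the way.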

What time is it there?

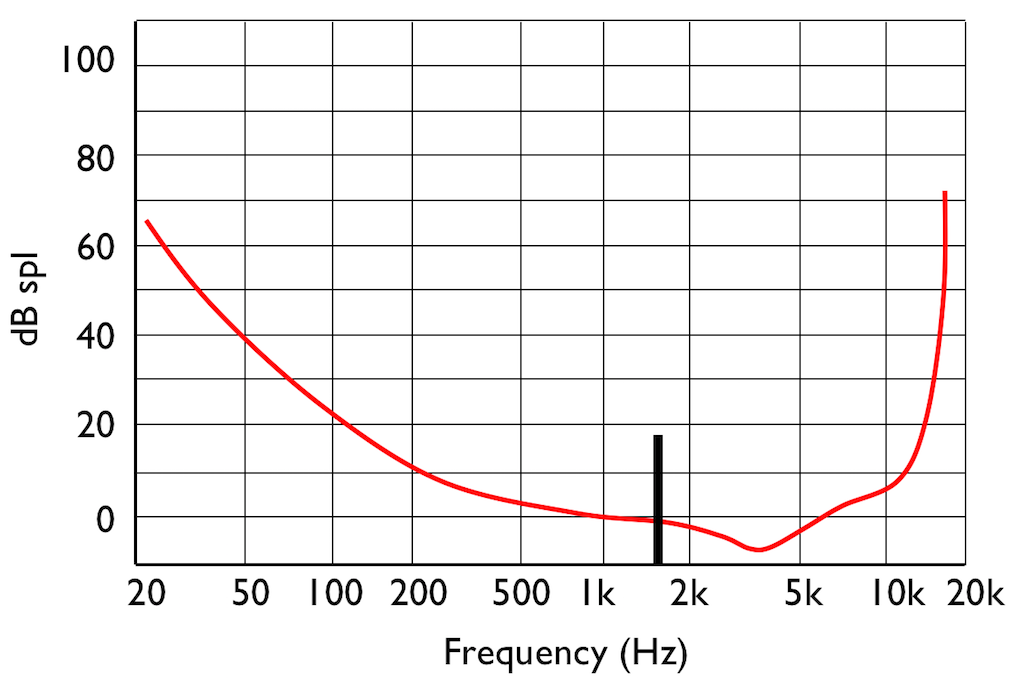

Look carefully at the two curves in Figure 4. The tick marks on the “Time” axes show the times at which the voltage should transition from low to high or vice versa. If we were to use our simple method for detecting voltage transitions (shown in Figure 3), then the transition times that it detects would be wrong…

As you can see in Figure 5, the system that detects the transition time is always late when the square wave is low-pass filtered. In this particular case, it’s always late by the same amount, so we aren’t too worried about it – but this won’t always be true…
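For a simple first-order (RC) low-pass filter, assumed here purely for illustration, the lateness can be worked out directly. If the filtered output is at v₀ when the input steps up to the high voltage V_H, the output follows

$$v(t) = V_H - (V_H - v_0)\,e^{-t/\tau}, \qquad \tau = RC = \frac{1}{2\pi f_c}$$

Setting v(t) equal to the half-way threshold V_T = (V_H + V_L)/2 and solving for t gives how late the detected edge is:

$$t_{\text{late}} = \tau \ln\!\left(\frac{V_H - v_0}{V_H - V_T}\right)$$

For a fixed-frequency square wave, every rising edge starts from the same v₀ (and, by symmetry, every falling edge is late by the same amount), so the lateness is constant. If the output had fully settled at v₀ = V_L before each edge, this reduces to t_late = τ ln 2.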

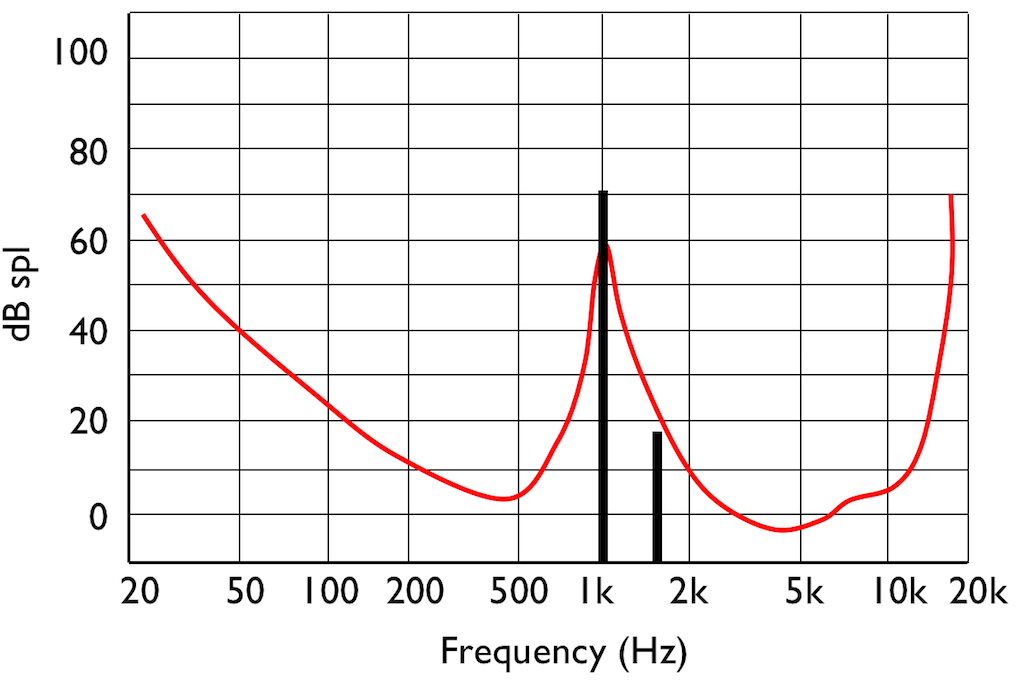

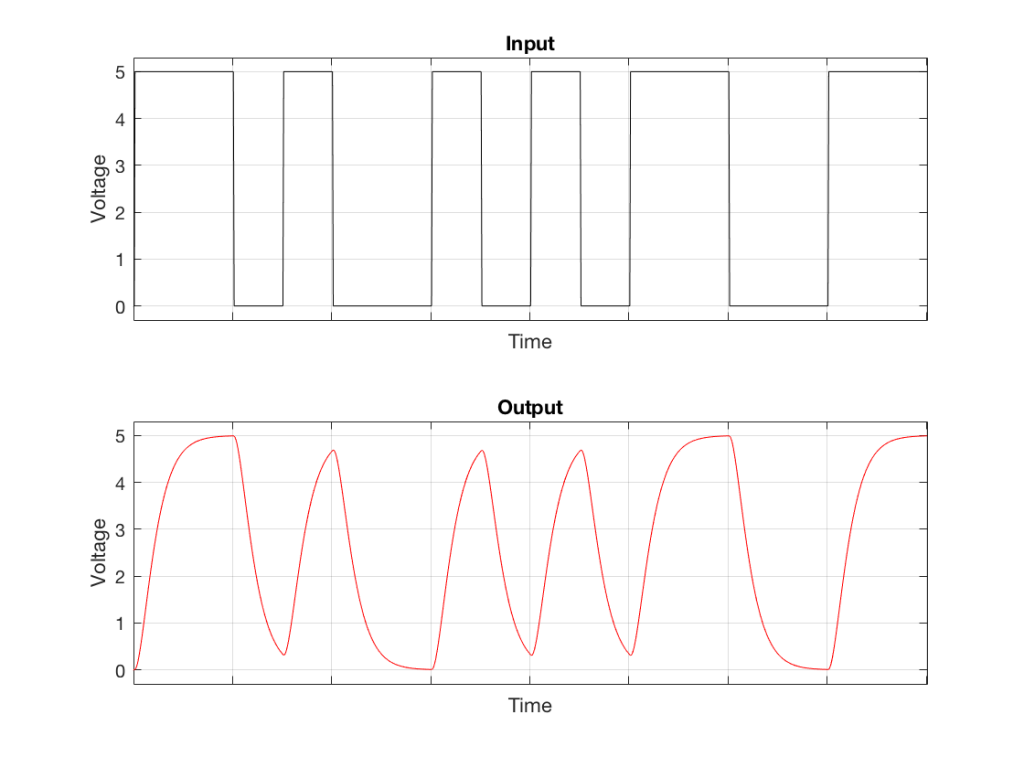

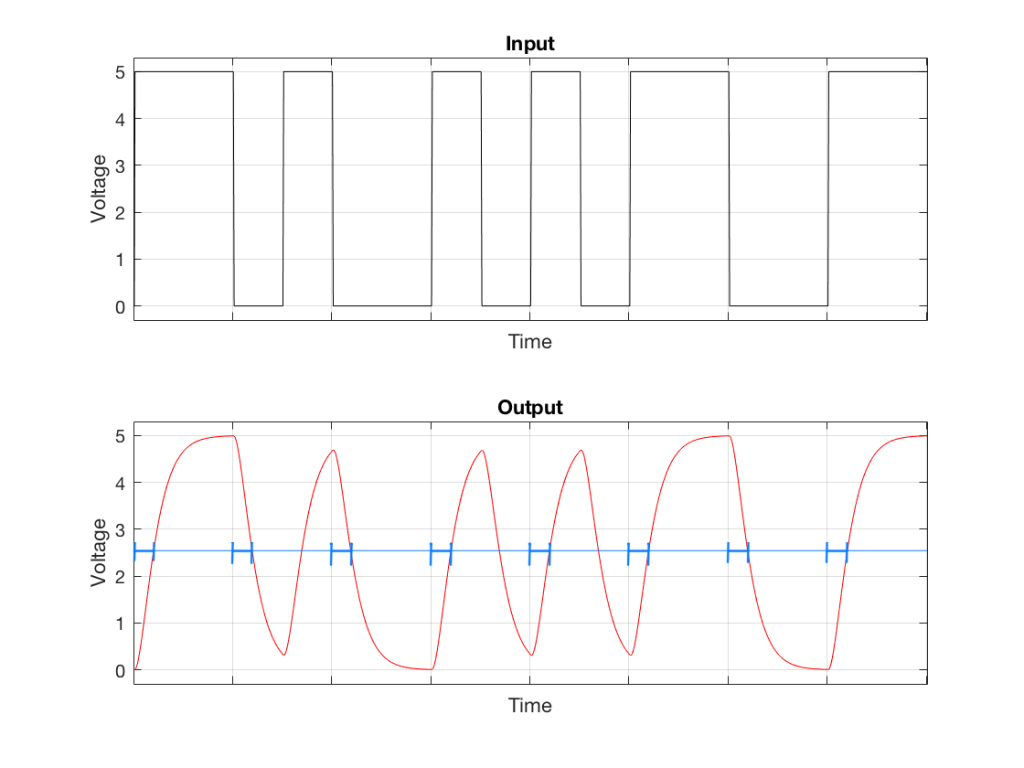

For example, what happens when the signal that we’re low-pass filtering is a bi-phase mark (which means that it’s a square wave with a modulated fundamental frequency) instead of a simple square wave with a fixed frequency?

As you can see in Figure 6, the low-pass filter has a somewhat strange effect on the modulated square wave. Now, when the binary value is a “1” and the square wave frequency is high, there isn’t enough time for the voltage to swing all the way to the new value before it has to turn around and go back the other way. Because it never quite gets to where it’s going (vertically), there is a change in when it crosses our threshold of detection (horizontally), as is shown below.
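The same effect can be reproduced numerically. The sketch below assumes, as a simplification, a first-order (RC) low-pass filter with time constant `tau` (in units of one bit period), a made-up bit pattern, and the half-way threshold detector; it reports how late each detected edge is compared with where it should have been.

```python
import math

def biphase_mark_encode(bits, start_level=0):
    """Two half-cell levels (0 or 1) per bit: the level always changes at the
    start of a bit, and changes again mid-bit when the bit is a 1."""
    level = start_level
    halves = []
    for b in bits:
        level ^= 1
        halves.append(level)
        if b == 1:
            level ^= 1
        halves.append(level)
    return halves

def lowpass_crossing_delays(bits, tau, steps_per_half=100):
    """Filter a bi-phase mark signal (0 V / 1 V, one bit per time unit) with a
    first-order low-pass of time constant `tau`, detect crossings of the 0.5 V
    threshold, and return how late each one is relative to its ideal edge."""
    half = 0.5                                 # duration of a half-bit
    dt = half / steps_per_half
    alpha = 1.0 - math.exp(-dt / tau)          # exact RC update for a held input
    halves = biphase_mark_encode(bits)

    # Ideal edge times: wherever a half-bit level differs from the previous one.
    edges, previous = [], 0                    # the line is assumed low before t = 0
    for i, level in enumerate(halves):
        if level != previous:
            edges.append(i * half)
        previous = level

    # Run the filter, starting fully settled at 0 V.
    y, samples = 0.0, [0.0]
    for level in halves:
        for _ in range(steps_per_half):
            y += alpha * (level - y)
            samples.append(y)

    # Half-way threshold detector with linear interpolation between samples.
    crossings = []
    for n in range(1, len(samples)):
        a, b = samples[n - 1], samples[n]
        if (a < 0.5) != (b < 0.5):
            crossings.append((n - 1 + (0.5 - a) / (b - a)) * dt)

    # This sketch assumes the filter is mild enough that no edge is lost.
    assert len(crossings) == len(edges), "filter too slow: an edge was missed"
    return [c - e for c, e in zip(crossings, edges)]

pattern = [0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 0, 1]   # made-up data
delays = lowpass_crossing_delays(pattern, tau=0.25)
print(f"earliest: {min(delays):.4f}  latest: {max(delays):.4f} bit periods")
```

Every edge is detected late, but no longer by the same amount: edges that follow a short half-bit pulse (part of a 1) are detected earlier than edges that follow a full-bit 0, because the voltage did not get as far away from the threshold. Feeding the same function a run of 0’s (a plain square wave) gives, after the first few edges, essentially identical delays.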

The conclusion (for now)

- IF we were to make a digital audio transmission system AND

- IF that system used a bi-phase mark as its protocol AND

- IF transmission was significantly band-limited AND

- IF we were only using this simple method to derive the clock from the signal itself

- THEN the clock for our receiving device would be incorrect. It would not just be late, but it would vary over time – sometimes a little later than other times… And therefore, we have a system with wander and/or jitter.

It’s important for me to note that the example I’ve given here about how that jitter might come to be in the first place is just one version of reality. There are lots of types of jitter and lots of root causes of it – some of which I’ll explain in this series of postings.

In addition, there are times when you need to worry about it, and times when you don’t – no matter how bad it is. And then, there are the sneaky times when you think that you don’t need to worry about it, but you really do…