#77 in a series of articles about the technology behind Bang & Olufsen loudspeakers

I’m occasionally asked about the technical details of connecting Bang & Olufsen loudspeakers to third-party (non-B&O) sources. In the “old days”, this was slightly difficult due to connectors, adapters, and outputs. However, that was a long time ago – although beliefs often persist longer than facts…

All Bang & Olufsen “BeoLab” loudspeakers are “active”. At the simplest level, this means that the amplifiers are built-in. In addition, almost all of the BeoLab loudspeakers in the current portfolio use digital signal processing. This means that the filtering and crossovers are implemented using a built-in computer instead of using resistors, capacitors, and inductors. This will be a little important later in this posting.

In order to talk about the compatibility issues surrounding the loudspeakers in the BeoLab portfolio – both with themselves and with other loudspeakers, we really need to break the discussion into two areas. The first is that of connectors and signals. The second, more problematic issue is that of “latency” (which is explained below…)

Connectors and signals

Since BeoLab loudspeakers have the amplifiers built-in, you need to connect them to an analogue “line level” signal instead of the output of an amplifier.

This means that, if you have a stereo preamplifier, then you just connect the “volume-regulated” Line Output of the preamp to the RCA line inputs of the BeoLab loudspeakers. (Note that the BeoLab 3 does not have a built-in RCA connector, so you need an adapter for this). Since the BeoLab loudspeakers (except for BeoLab 5, 50, and 90) are fixed at “full volume”, then you need to ensure that your Line Output of the source is, indeed, volume-regulated. If not, things will be surprisingly loud…

In addition to the RCA Line inputs, most BeoLab loudspeakers also have at least one digital audio input. The BeoLab 5 has an S/P-DIF “coaxial” input. The BeoLab 17, 18, and 20 have optical digital inputs. The BeoLab 50 and 90 have many options to choose from. Again, apart from the BeoLab 5, 50, and 90, the loudspeakers are fixed at “full volume”, so if you are going to use the digital input for the BeoLab 17, 18, or 20, you will need to enable the volume regulation of the digital output of your source, if that’s possible.

Latency

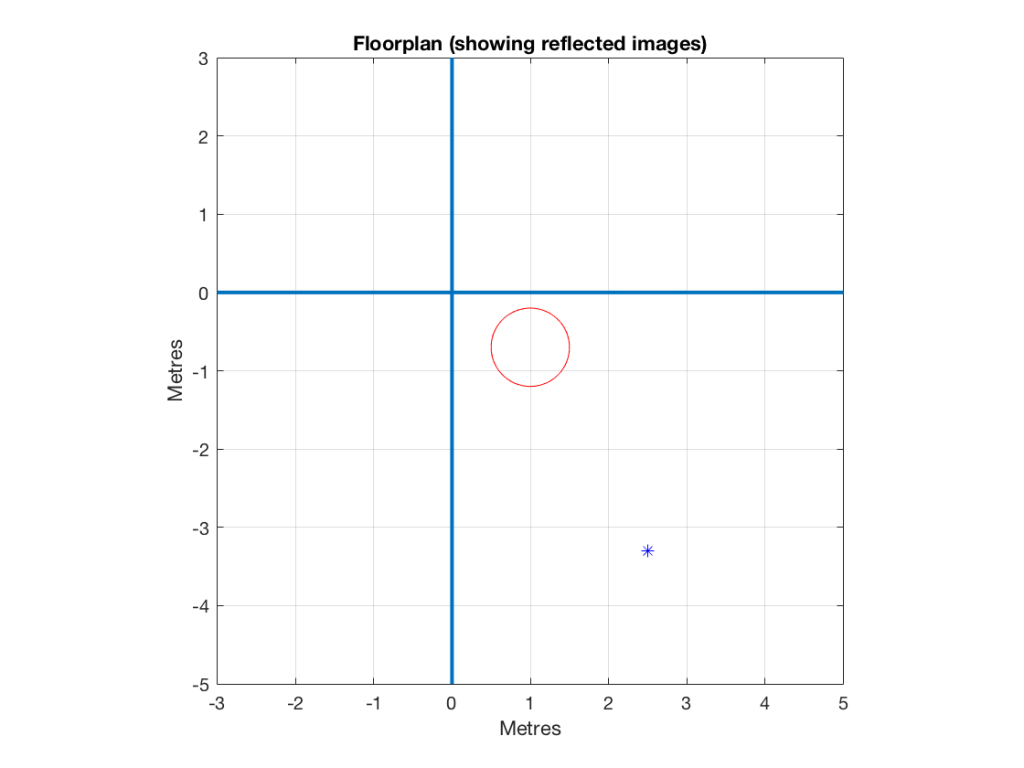

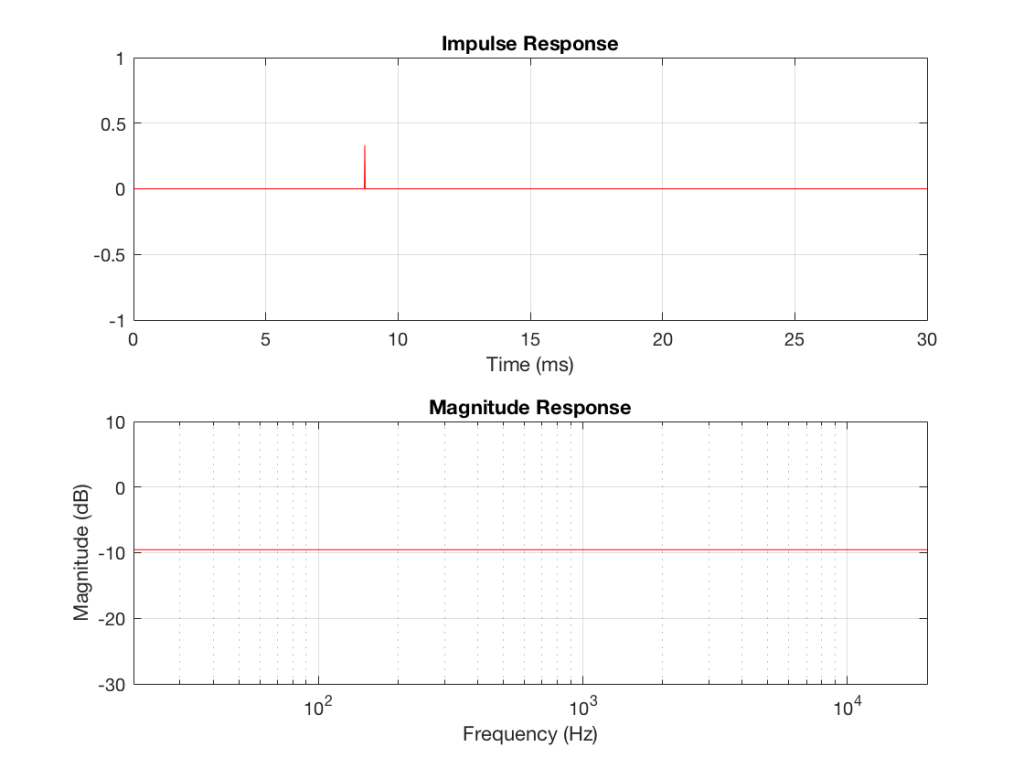

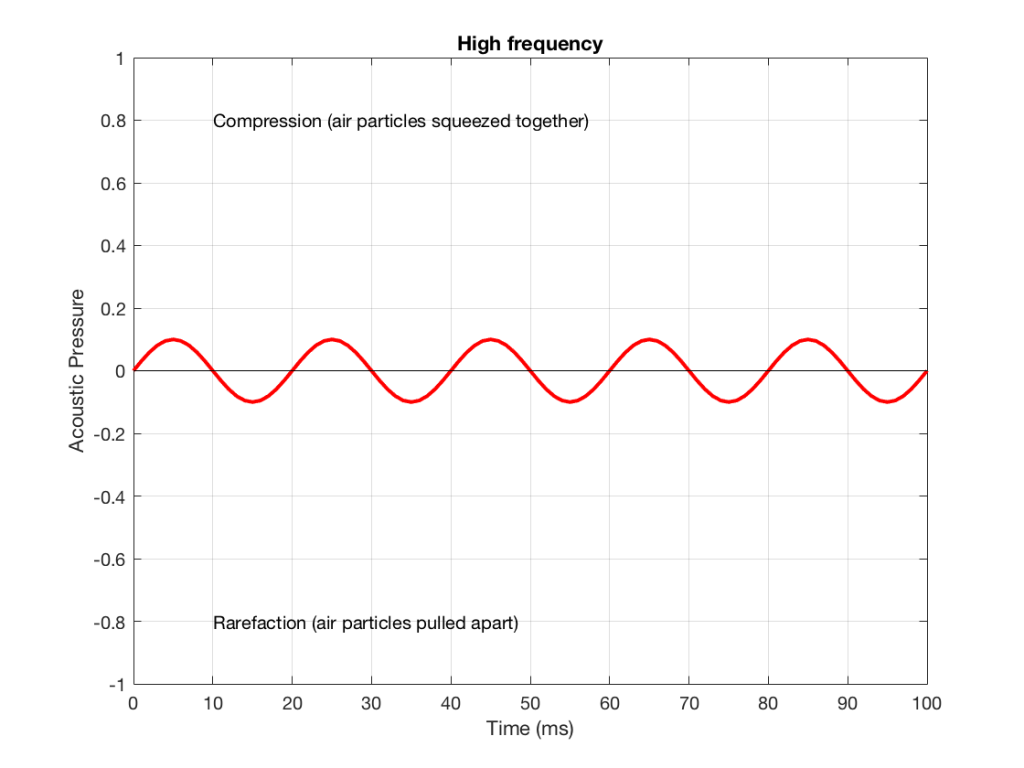

Any audio device has some inherent “latency” or “delay from the time the signal comes in until it goes out”. For some devices, this latency can be so low that we can think of it as being 0 seconds. In other words, for some devices (say, a wire, for example) the signal comes out at the same time as it comes in (as far as we’re concerned… I’m not going to get into an argument about the speed of electricity or light, since these go very fast…)

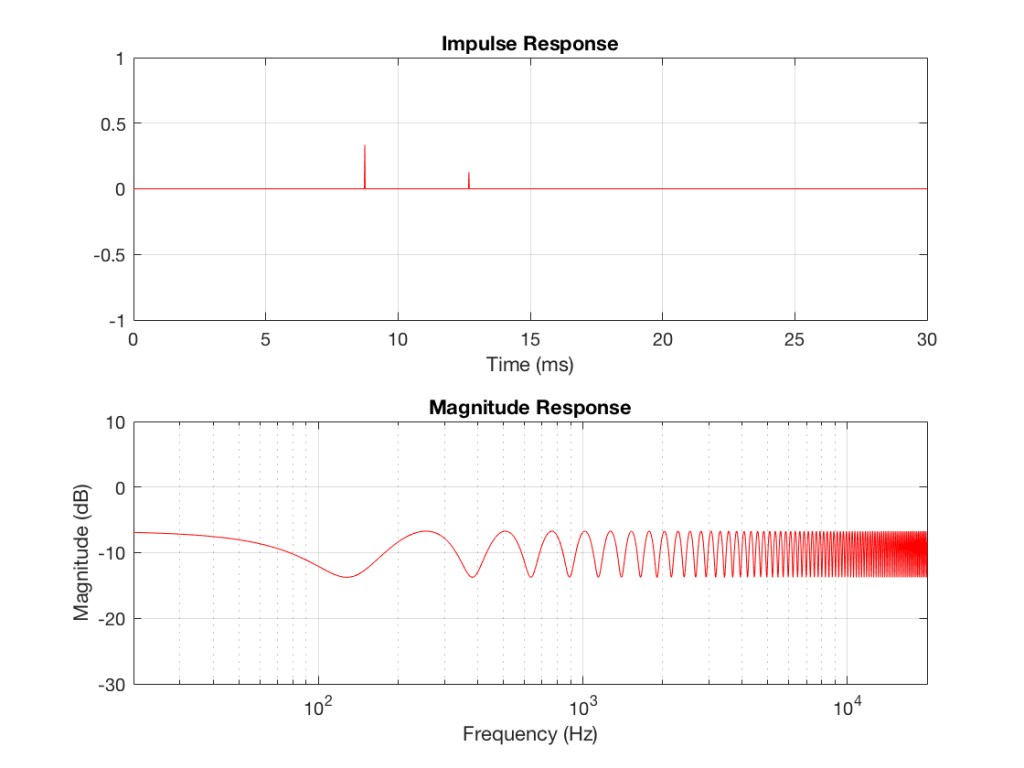

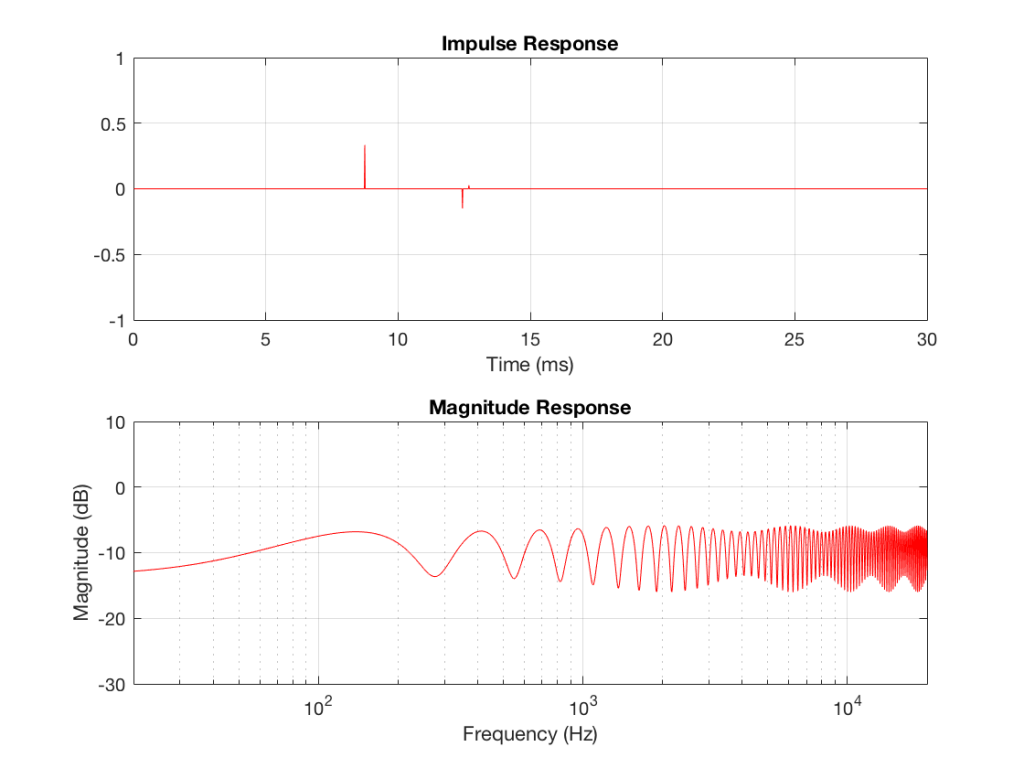

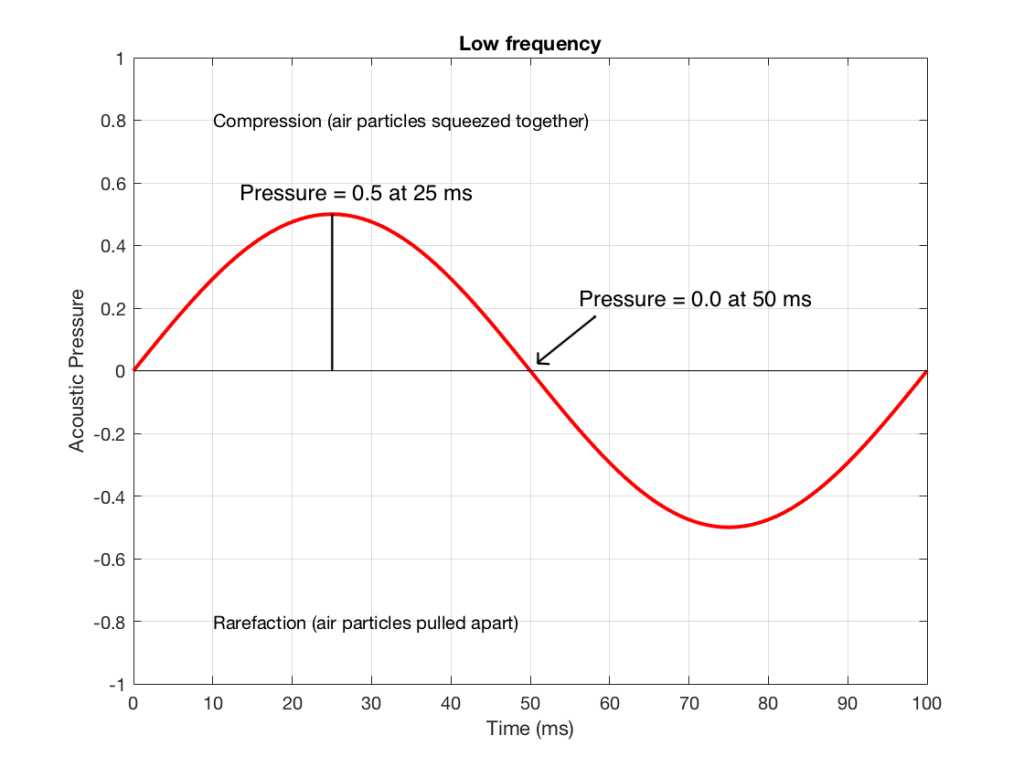

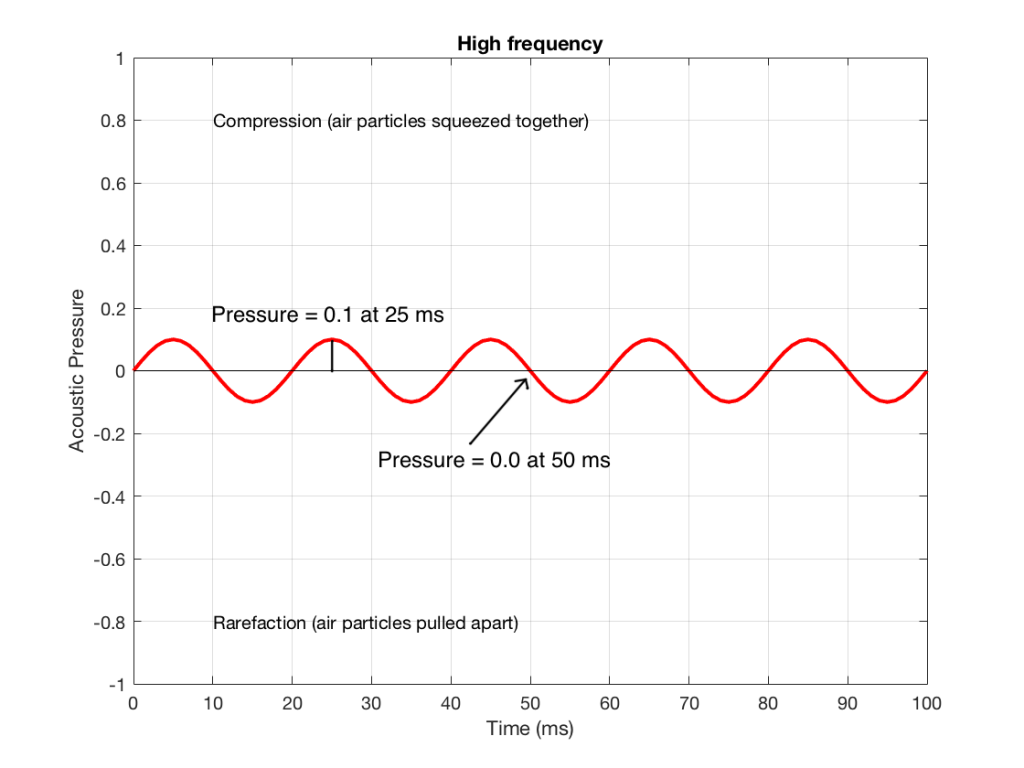

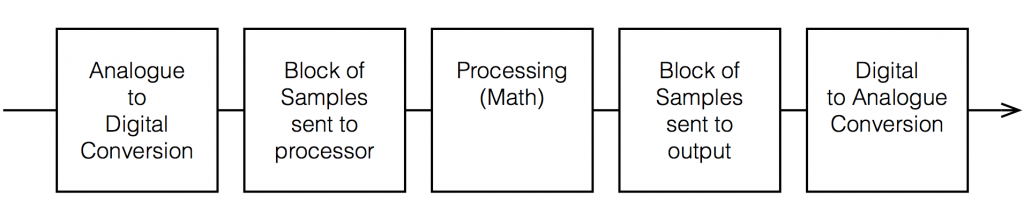

Any audio device that uses digital signal processing has some measurable (and possibly audible) latency. This is primarily due to 5 things, seen in the flowchart below.

Each of these 5 steps each have different amounts of latency – some of them very, very small. Some are bigger. One thing to know about digital signal processing is that, typically, in order to make the math more efficient (and therefore squeeze as much as possible out of the computing power), the samples are processed in “blocks” – not one-by-one. So, the signal comes into the input, it gets converted to individual samples, and those samples are collected into a block of 64 samples (for example) before being sent to the processing.

So, let’s say that you have a sampling rate of 44100 samples per second, and a block size of 64 samples. This then means that you send a block to the processor every 64 * 1/44100 = 1.45 ms. That block gets processed (which takes some time), and then sent as another block of 64 samples to the DAC (digital to analogue converter).

So, ignoring the latency of the conversion from- and to-analogue, in the example above, it will take 1.45 ms to get the signal into the processor, you have a 1.45 time window to do the processing, and it will take another 1.45 ms to get the signal out to the DAC. This is a total of 4.35 ms from the instant a signal gets comes into the analogue input to the moment it comes out the analogue output.

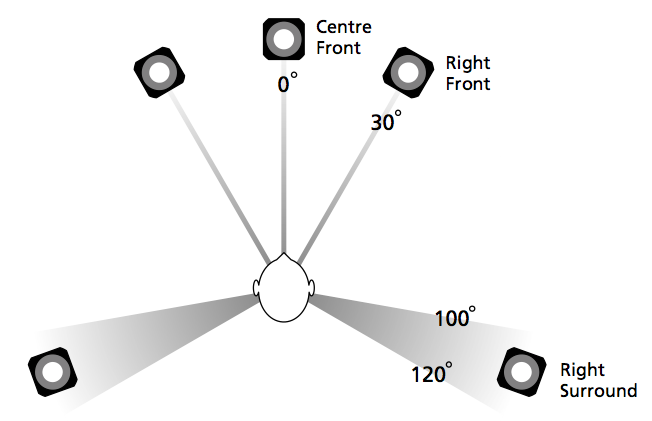

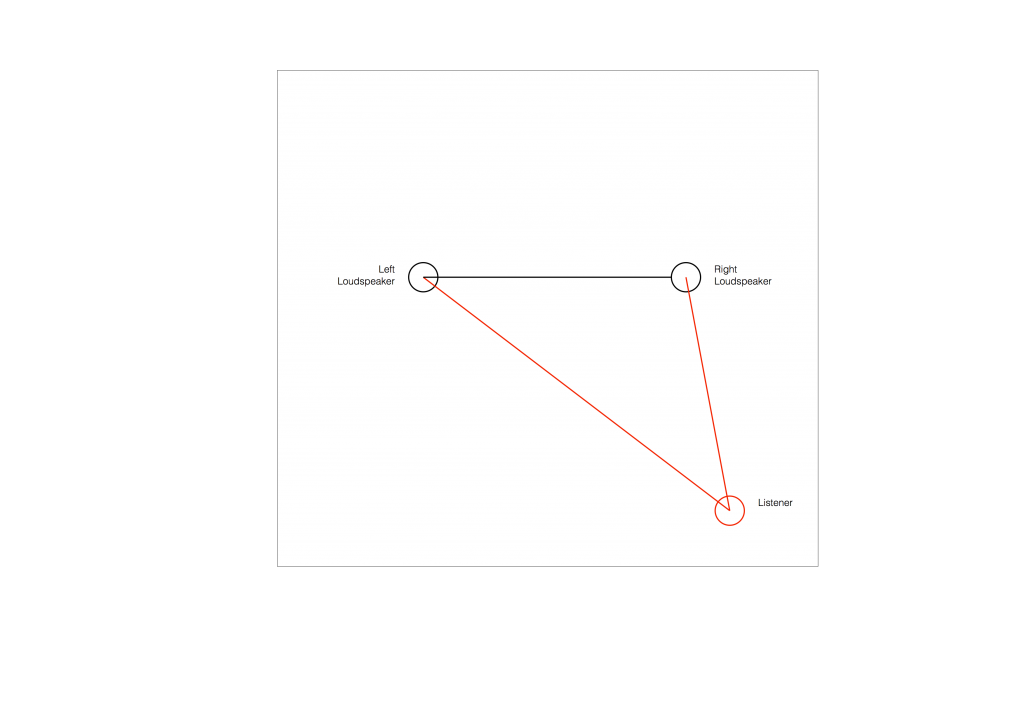

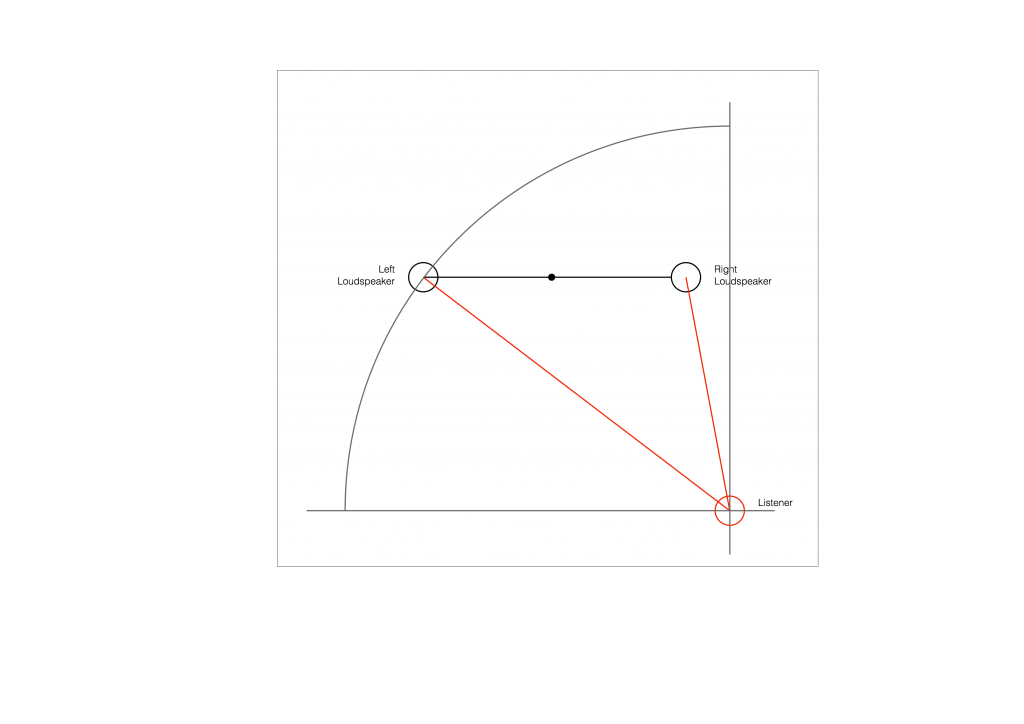

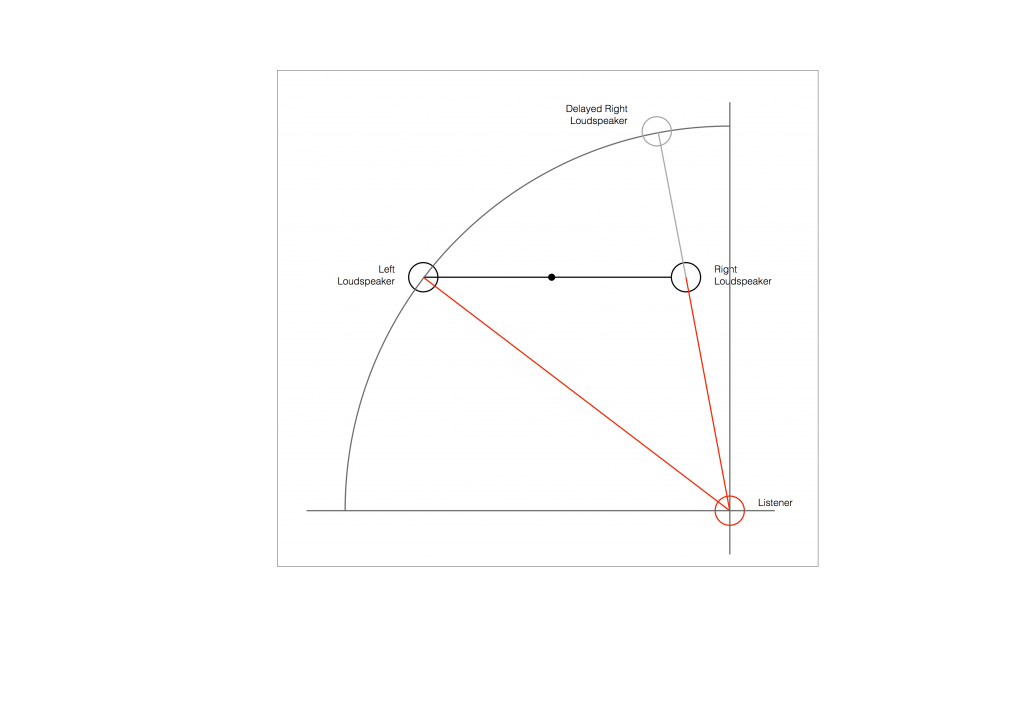

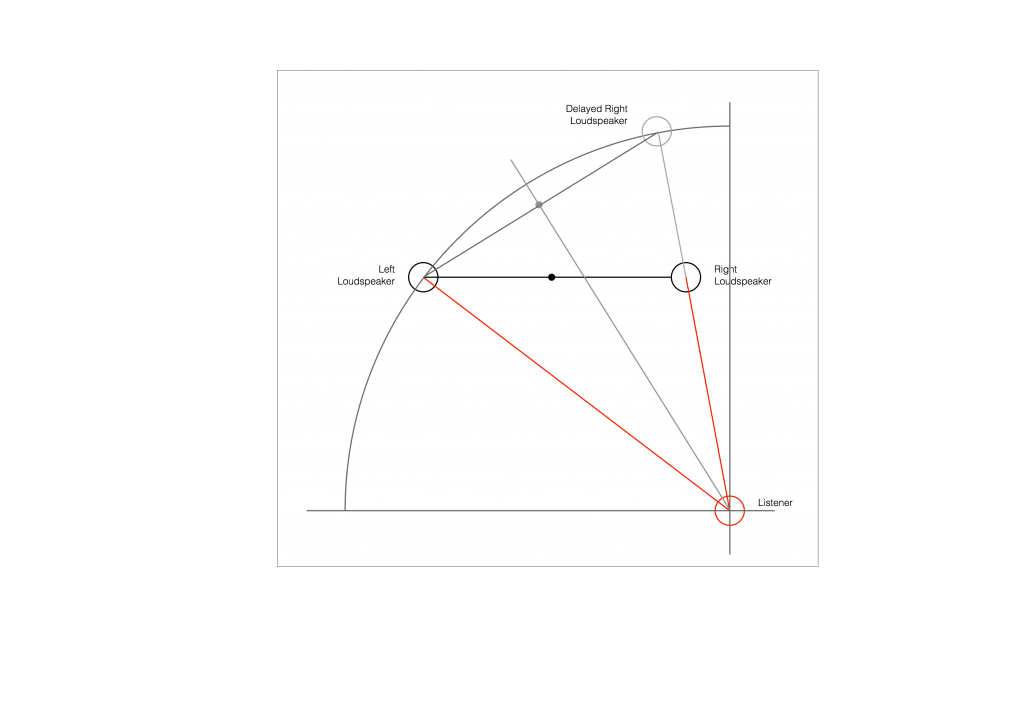

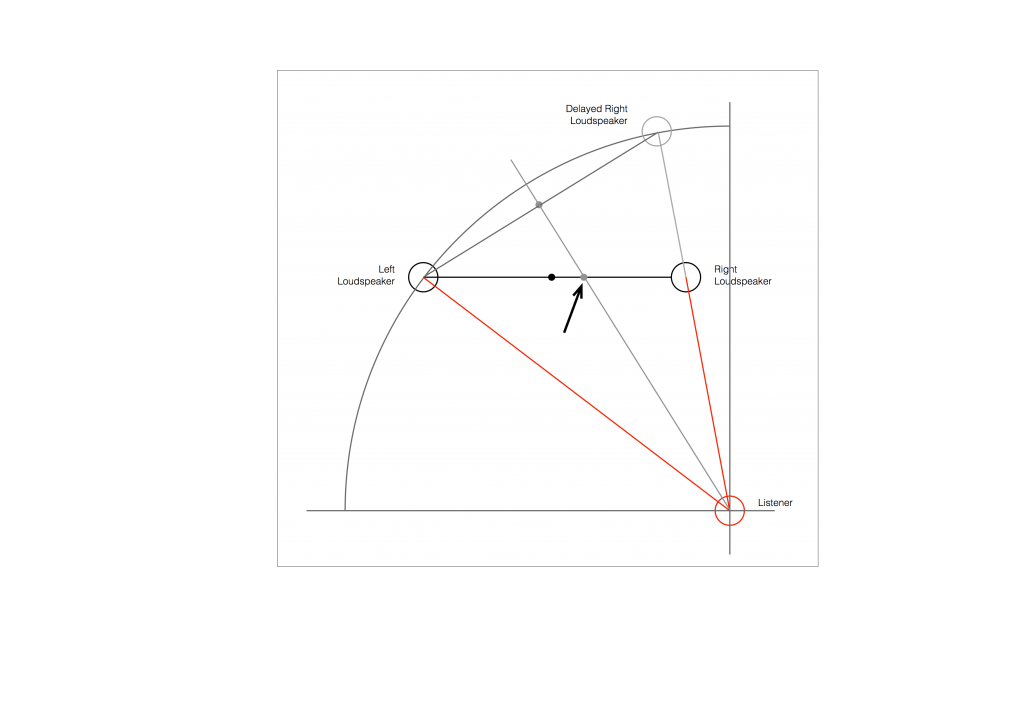

Sidebar: Of course, 4.3 ms is not a long time. If you had a loudspeaker outdoors, then adding 4.35 ms to its latency would be same delay you would incur by moving 1.5 m (or about 4.9 feet) further away. However, in terms of a stereo or multichannel audio system, 4.35 ms is an eternity. For example, if you have a correctly-configured stereo loudspeakers (with each loudspeaker 30º from centre-front, and you’re sitting in the “sweet spot”, if you delay the left loudspeaker by just 0.2 ms, then lead vocals in your pop tunes will move 10º to the right instead of being in the centre. It only takes 1.12 ms of delay in one loudspeaker to move things all the way to the opposite side. In a multichannel loudspeaker configuration (or in headphones), some of the loudspeaker pairs (e.g. Left Surround – Right Surround) result in you being even more sensitive to these so-called “inter-channel delay differences”.

Also, the amount of time required by the processing depends on what kind of processing you’re doing. In the case of BeoLab 50 and 90, for example, we are using FIR filters as part of the directivity (Beam Width and Beam Direction) processing. Since this filtering extends quite low in frequency, the FIR filters are quite long – and therefore they require extra latency. To add a small amount of confusion to this discussion (as we’ll see below) this latency is switchable to be either 25 ms or 100 ms. If you want Beam Width control to extend as low in frequency as possible, you need to use the 100 ms “Long Latency” mode. However, if you need lip-synch with a non-B&O source, you should use the 25 ms “Low Latency” mode (with the consequent loss of directivity control at very low frequencies).

Latency in BeoLab loudspeakers

In order to use BeoLab loudspeakers with a non-B&O source (or an older B&O source) , you may need to know (and compensate for) the latency of the loudspeakers in your system. This is particularly true if you are “mixing and matching” loudspeakers: for example, using different loudspeaker models (or other brands – *gasp*) in a single multichannel configuration.

| Model | A / D | Latency (ms) | Equivalent in m | Volume Regulation? |

| Unknown analogue | A | 0 | 0 | No |

| Beolab 1 | A | 0 | 0 | No |

| Beolab 2 | A | 0 | 0 | No |

| Beolab 3 | A | 0 | 0 | No |

| Beolab 4 | A | 0 | 0 | No |

| Beolab 5 | D | 3.92 | 1.35 | Yes |

| Beolab 7 series | A | 0 | 0 | No |

| Beolab 9 | A | 0 | 0 | No |

| Beolab 12 series | D | 4.4 | 1.51 | No |

| Beolab 17 | D | 4.4 | 1.51 | No |

| Beolab 18 | D | 4.4 | 1.51 | No |

| Beolab 19 | D | 4.4 | 1.51 | No |

| Beolab 20 | D | 4.4 | 1.51 | No |

| Beolab 50 | D | 25 / 100 | 8.6 / 34.4 | Yes |

| Beolab 90 | D | 29 / 100 | 10.0 / 34.4 | Yes |

How to Do It

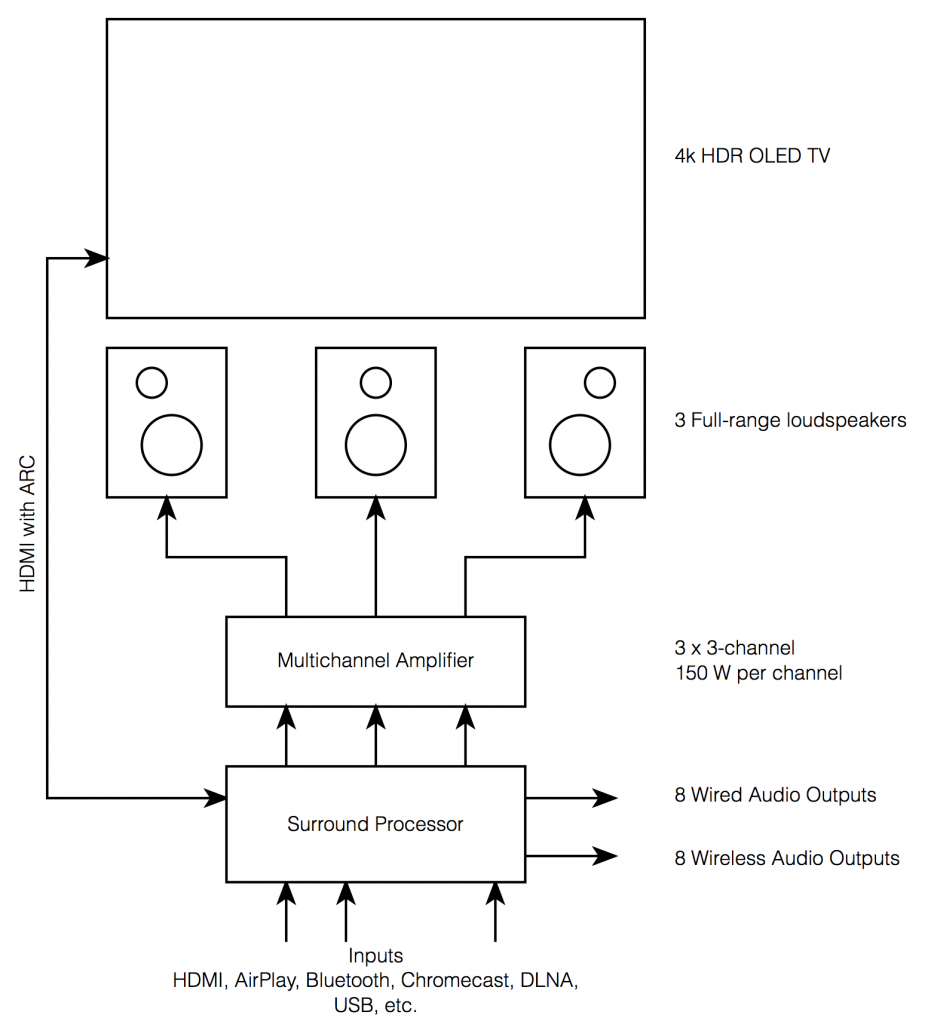

I’m going to make two assumptions for the rest of this posting:

- you have a stereo preamp or a surround processor / AVR that has a “Speaker Distance” or “Speaker Delay” adjustment parameter (measured from the loudspeaker location to the listening position)

- it does not have a “loudspeaker latency” adjustment parameter

The simple version (that probably won’t work):

Since the latency of the various loudspeakers can be “translated” into a distance, and since AVR’s typically have a “Speaker Distance” parameter, you simply have to add the equivalent distance of the loudspeaker’s latency to the actual distance to the loudspeaker when you enter it in the menus.

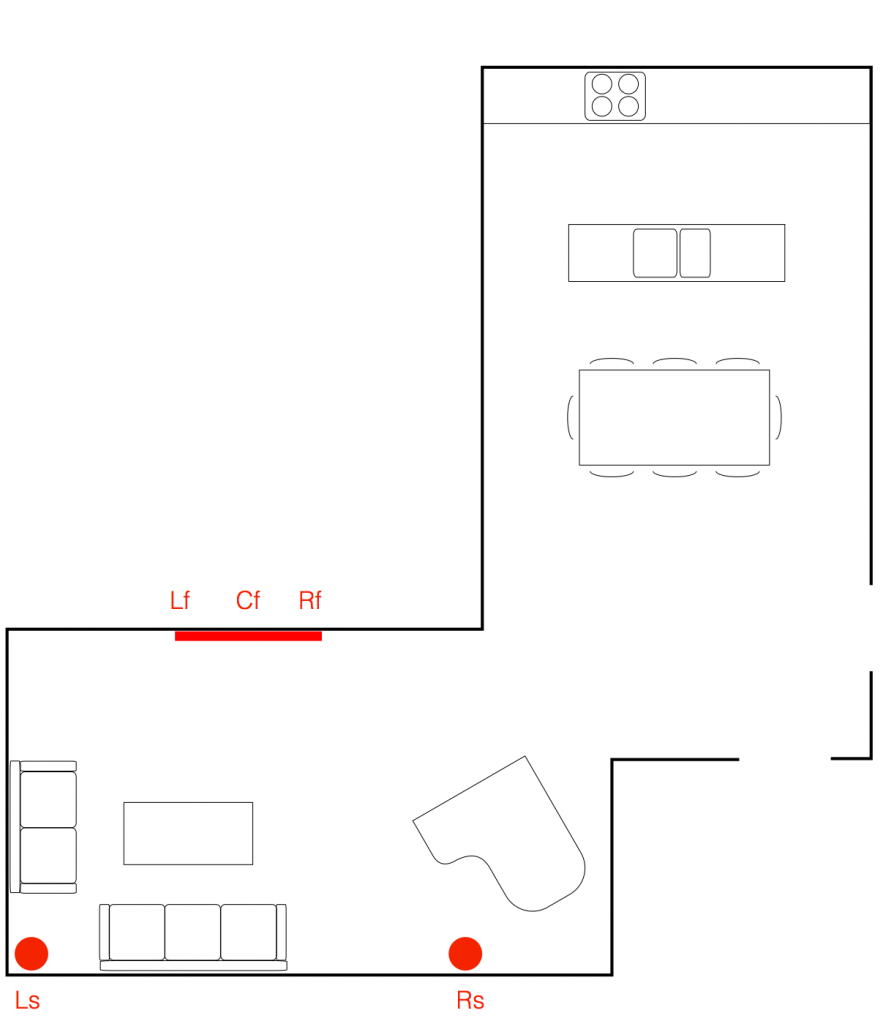

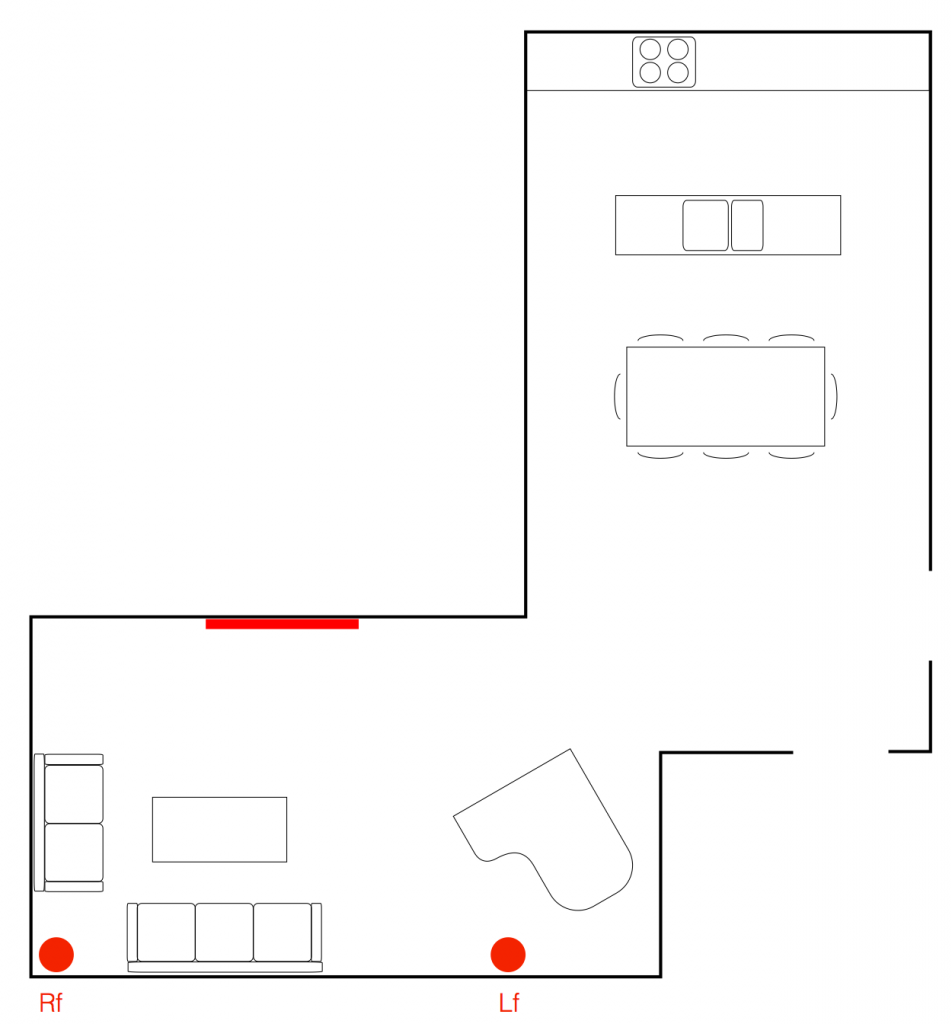

For example, let’s say that you have a 5.0 channel loudspeaker configuration with the following actual speaker distances, measured in the room.

| Channel | Model | Distance |

| Left Front | Beolab 5 | 3.7 |

| Right Front | Beolab 5 | 3.9 |

| Centre Front | Beolab 3 | 3.9 |

| Left Surround | Beolab 17 | 1.6 |

| Right Surround | Beolab 17 | 3.2 |

You then look up the equivalent distances in the first table and add the appropriate number to each loudspeaker.

| Channel | Model | Distance | + | Latency Equivalent | = | Total |

| Left Front | Beolab 5 | 3.7 | + | 1.35 | = | 5.05 |

| Right Front | Beolab 5 | 3.9 | + | 1.35 | = | 5.25 |

| Centre Front | Beolab 3 | 3.9 | + | 0 | = | 3.9 |

| Left Surround | Beolab 17 | 1.6 | + | 1.51 | = | 3.11 |

| Right Surround | Beolab 17 | 3.2 | + | 1.51 | = | 4.71 |

This technique will work fine unless the total distance that you have to enter in the AVR’s menus is greater than its maximum possible value (which is typically 10.0 m on most brands and models that I’ve seen – although there are exceptions).

So, what do you do if your AVR can’t handle a value that’s high enough? Then you need to fiddle with the numbers a bit…

The slightly-more complicated version (which might work most of the time)

When you enter the Speaker Distances in the menus of your AVR, you’re doing two things:

- calibrating the delay compensation for the differences in the distances from the listening position to the individual loudspeakers

- (maybe) calibrating the system to ensure that the sound arrives at the listening position at the same time as the video is displayed on the screen (therefore sending the sound out early, since it takes longer for the sound to travel to the sofa than it takes the light to get from your screen…)

That second one has a “maybe” in front of it for a couple of reasons:

- this is a very small effect, and might have been decided by the manufacturer to be not worth the effort

- the manufacturer of an AVR has no way of knowing the latency of the screen to which it’s attached. So, it’s possible that, by outputting the sound earlier (to compensate for the propagation delay of the sound) it’s actually making things worse (because the screen is delayed, but the AVR doesn’t know it…)

So, let’s forget about that lip-synch issue and stick with the “delay compensation for the differences in the distances” issue. Notice that I have now highlighted the word “differences” in italics twice… this is important.

The big reason for entering Speaker Distances is that you want the a sound that comes out of all loudspeakers simultaneously to reach the listening position simultaneously. This means that the closer loudspeakers have to wait for the further loudspeakers (by adding an appropriate delay to their signal path). However, if we ignore the synchronisation to another signal (specifically, the lips on the screen), then we don’t need to know the actual (or “absolute”) distance to the loudspeakers – we only need to know their differences (or “relative distances”). This means that you can consider the closest loudspeaker to have a distance of 0 m from the listening position, and you can subtract that distance from the other distances.

For example, using the table above, we could subtract the distance to the closest loudspeaker (the Left Surround loudspeaker, with a distance of 1.6 m) from all of the loudspeakers in the table, resulting in the table below.

| Channel | Model | Distance | – | Closest | = | Result |

| Left Front | Beolab 5 | 3.7 | – | 1.6 | = | 2.1 |

| Right Front | Beolab 5 | 3.9 | – | 1.6 | = | 2.3 |

| Centre Front | Beolab 3 | 3.9 | – | 1.6 | = | 2.3 |

| Left Surround | Beolab 17 | 1.6 | – | 1.6 | = | 0 |

| Right Surround | Beolab 17 | 3.2 | – | 1.6 | = | 1.6 |

Again, you look up the equivalent distances in the first table and add the appropriate number to each loudspeaker.

| Channel | Model | Distance | + | Latency equivalent | = | Total |

| Left Front | Beolab 5 | 2.1 | + | 1.35 | = | 3.45 |

| Right Front | Beolab 5 | 2.3 | + | 1.35 | = | 3.65 |

| Centre Front | Beolab 3 | 2.3 | + | 0 | = | 2.3 |

| Left Surround | Beolab 17 | 0 | + | 1.51 | = | 1.51 |

| Right Surround | Beolab 17 | 1.6 | + | 1.51 | = | 3.11 |

As you can see in Table 5, the end results are smaller than those in Table 3 – which will help if your AVR can’t get to a high enough value for the Speaker Distance.

The only-slightly-even-more complicated version (which has a better chance of working most of the time)

Of course, the version I just described above only subtracted the smallest distance from the other distances, however, we could do this slightly differently and subtract the smallest total (actual + equivalent distance) from the totals to “force” one of the values to 0 m. This can be done as follows:

Starting with a copy of Table 3, we get a preliminary Total, and then subtract the smallest of these from all value to get our Final Speaker Distance.

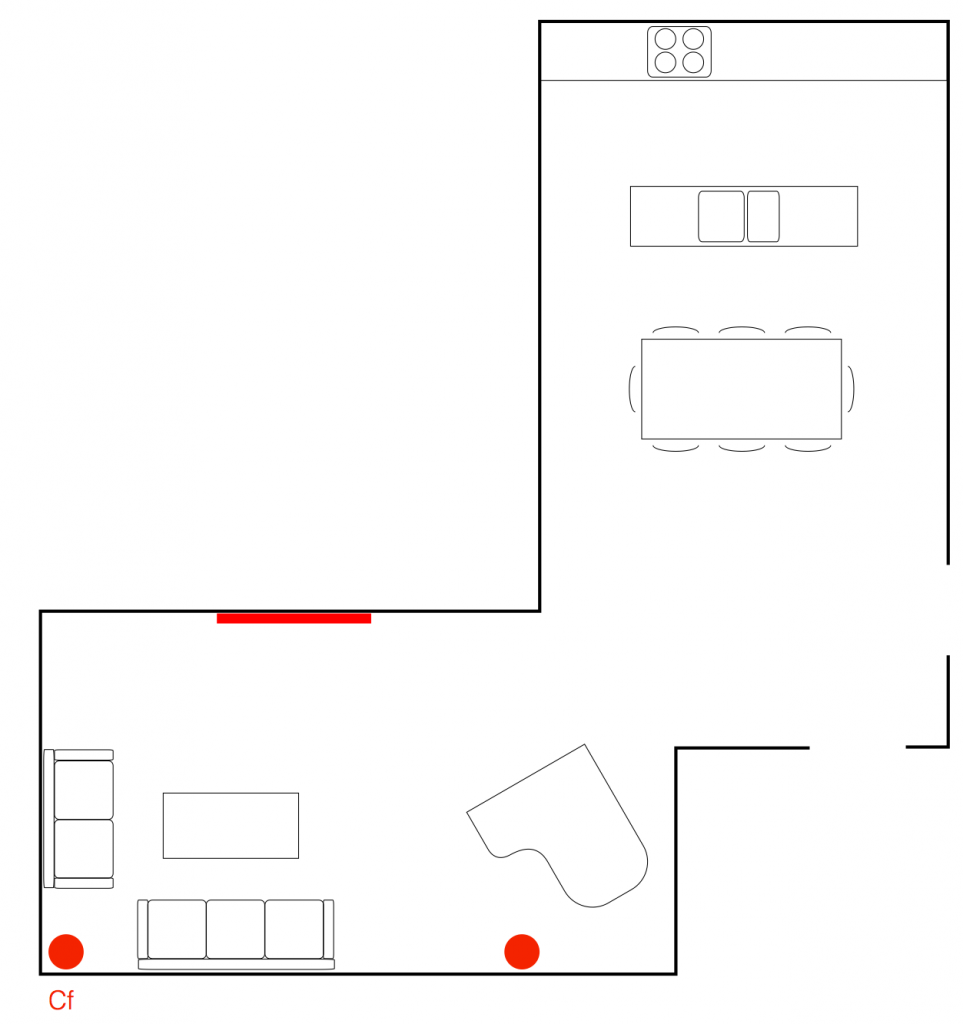

| Channel | Model | Distance (m) | + | Latency (m) | = | Total | – | Smallest | = | Final |

| Lf | BL 5 | 3.7 | + | 1.35 | = | 5.05 | – | 3.11 | = | 1.94 |

| Rf | BL 5 | 3.9 | + | 1.35 | = | 5.25 | – | 3.11 | = | 2.14 |

| Cf | BL 3 | 3.9 | + | 0 | = | 3.9 | – | 3.11 | = | 0.79 |

| Ls | BL17 | 1.6 | + | 1.51 | = | 3.11 | – | 3.11 | = | 0 |

| Rs | BL 17 | 3.2 | + | 1.51 | = | 4.71 | – | 3.11 | = | 1.6 |

Of course, if you do it the first way (as shown in Table 3) and the values are within the limits of your AVR, then you don’t need to get complicated and start subtracting. And, in many cases, if you don’t own BeoLab 50 or 90, and you don’t live in a mansion, then this will probably be okay. However… if you DO own BeoLab 50 or 90, and/or you do live in a mansion, then you should probably get used to subtracting…

Some additional information about BeoLab 50 & 90

As I mentioned above, the BeoLab 50 and BeoLab 90 have two latency options. The “High Latency” option (100 ms) allows us to implement FIR filters that control the directivity (the Beam Width and Beam Directivity) to as low a frequency as possible. However, in this mode, the latency is so high that you will notice that the sound is behind the picture if you have a non-B&O television.* In other words, you will not have “lip-synch”.

For customers with a non-B&O television*, we have included a “Low Latency” option (25 ms) which is within the tolerable limits of lip-synch. In this mode, we are still controlling the directivity of the loudspeaker with an FIR, but it cannot go as low in frequency as the “High Latency” option.

As I mentioned above, a 100 ms latency in a loudspeaker is equivalent to placing it 34.4 m further away (ignoring the obvious implications on the speaker level). If you have a third-part source such as an AVR, it is highly unlikely that you can set a Speaker Distance in the menus to be the actual distance + 34.4 m…

So, in the case of BeoLab 50 or 90, you should manually set the Latency Mode to “Low Latency” (using the setup options in the speaker’s app). This then means that you should add “only” 8.6 m to the actual distance to the loudspeaker.

Of course, if you are using the BeoLab 50 or 90 alone (meaning that there is no video signal, and no other loudspeakers that need time-alignment) then this is irrelevant, and you can just set the Speaker Distance to 0 m. You can also change the loudspeakers to another preset (that you or your installer set up) that uses the High Latency mode for best performance.

Instructions on how to do this are found in the Technical Sound Guide for the BeoLab 50 or the BeoLab 90 via the Bang & Olufsen website at www.bang-olufsen.com.

* Here a “B&O Television” means a BeoPlay V1, BeoVision 11, 14, Avant, Avant NG, Horizon, or Eclipse. Older B&O televisions are different… This will be discussed in the next blog posting.