
CHECK YOUR PREFERENCES! (was: “Garbage in, garbage out…”)

Stop the Press

The following posting is a description of a test that I did on two streaming services where I concluded that one of them is probably doing dynamic range compression and the other one probably isn’t.

My conclusion was only partly correct. One of the players was, indeed, doing dynamic range compression. However, this was because I asked it to do so. Since posting this article, I have found an “Advanced Preferences” in the player that includes the option to make the tracks more alike in level. I had this option set to “ON” when I did this test.

So, almost everything you are about to read below is technically correct – but the conclusion that one of the services is “worse” than the other is not fair. I’ll re-do the entire test and re-post the new analysis and conclusions…

My humble apologies….

-geoff

Introduction

Tom Lehrer once said “Life is like a sewer. What you get out of it depends on what you put into it.” The same is true when you’re testing loudspeakers. What you get out of them depends on what you feed into them.

This is an important thing to keep in mind when you’re listening to loudspeakers. If you listen to a pair of loudspeakers for the first time and you decide that there’s something about them that you don’t like, you should make sure that whatever it is that you don’t like about them is not the fault of your source. This might mean that there’s something in the recording that’s causing the issue (like a resonance in the vocal booth, or a clipped master, for example). It might mean that the player has some issues (computer headphone outputs are notoriously noisy, for example). It might mean that you should be careful that you’re not listening to a lower bitrate psychoacoustic CODEC like MP3, for example. Or it might mean something else that isn’t immediately obvious.

This is particularly true if you use a streaming service, of which there seem to be newcomers every day… Now, before we go any further, I’ll warn you that I’m not going to mention any names in this posting. So, don’t email me and ask “Which services were you talking about in that posting? I promise I won’t tell anyone…” There are various reasons for this – just don’t bother asking.

When you choose a streaming service, there are a couple of things to consider. One is the level of service (and therefore, possibly quality) you want to buy. Some services offer better quality for a higher price. Some services offer music streaming for free if you can live with the commercials (typically at the same audio quality as the low-price version). Different services use different CODECs. Some use MP3, some use Ogg Vorbis, and some claim to stream lossless signals. You can then choose different bitrates or CODECs at different prices.

I’m not going to get into a discussion of the pros and cons of 320 kbps MP3 vs 160 kbps Ogg Vorbis, or whether anything is as “good” as lossless. Lots of people are discussing that on lots of websites. Feel free to go there to have that fight. What I want to talk about is something else: dynamic range compression.

You should beware when you choose a streaming service that some of them apply dynamic range compression to the music, regardless of the CODEC. This not only means that the quiet tunes are louder, and the louder tunes are quieter. It also means that the quieter sections of the tunes are louder, and the louder sections are quieter. Even worse, in some cases, this may be done on a frequency-dependent basis (using something called a multi-band compressor) which changes the overall spectral balance of the tune over time, and does it differently for different tracks due to their different spectral characteristics.
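To make the effect concrete, here is a minimal sketch of a broadband (single-band) compressor in Python. It is an illustration under stated assumptions, not the processing that any particular service uses: the threshold, ratio, make-up gain and smoothing coefficient are hypothetical values picked for the demonstration, and the test signal is just a quiet tone followed by a loud one.

```python
import math

def rms_db(samples):
    """RMS level in dB relative to full scale (1.0)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_square)

def compress(samples, threshold_db=-20.0, ratio=4.0, makeup_db=6.0, smooth=0.999):
    """Very simple feed-forward compressor (all parameters are hypothetical).

    A one-pole smoother tracks the squared signal as a rough level
    detector. When the detected level is above the threshold, the gain
    is reduced so that the overshoot shrinks by `ratio`. The make-up
    gain is applied to everything, so quiet passages come out louder.
    """
    out = []
    level_sq = 0.0
    for s in samples:
        level_sq = smooth * level_sq + (1.0 - smooth) * s * s
        level_db = 10.0 * math.log10(max(level_sq, 1e-12))
        over_db = max(0.0, level_db - threshold_db)
        gain_db = makeup_db - over_db * (1.0 - 1.0 / ratio)
        out.append(s * 10.0 ** (gain_db / 20.0))
    return out

def sine(amplitude, n, freq=440.0, fs=44100.0):
    """n samples of a sine tone with the given peak amplitude."""
    return [amplitude * math.sin(2.0 * math.pi * freq * i / fs) for i in range(n)]

# One second of a quiet passage followed by one second of a loud passage.
n = 44100
signal = sine(0.05, n) + sine(0.8, n)
processed = compress(signal)

# Level gap between the loud and the quiet half, before and after.
gap_before = rms_db(signal[n:]) - rms_db(signal[:n])
gap_after = rms_db(processed[n:]) - rms_db(processed[:n])
print(f"loud-vs-quiet gap: {gap_before:.1f} dB before, {gap_after:.1f} dB after")
```

The gap between the two passages is about 24 dB going in and considerably smaller coming out, which is the "quiet sections louder, loud sections quieter" behaviour described above. A multi-band compressor would run something like this separately in several frequency bands.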

Since I have no plan to point fingers, my goal here is to help you learn to point your own fingers. In other words, how can you easily find out whether your streaming service is doing more than “just” reducing the bitrate of the audio signal.

The trick to find this out is a simple matter of doing a quick back-to-back comparison. I’ll describe the technique assuming that you’re comparing two streaming services using a single computer – but you could (of course) apply this technique to a streaming service vs. local file playback.

1. Open up accounts on two streaming services.

2. Simultaneously open a player for each service.

3. Set the volume in each player to maximum.

4. Set the output volume of your computer (or the master volume of the loudspeakers/headphones) to a comfortable level. (We’ll have to assume that your computer isn’t doing anything with dynamic range, which it probably is…)

5. Open up the same song on both players (a suggested list is below)

6. Go back and forth, playing 10 (or so) seconds on one player, stopping, then playing the same 10 seconds on the other player.

7. Listen to what happens when you play the same section of the same song in the two players. (Take notes, if necessary)

8. Load a very different song and repeat steps 6 and 7.

For example, open up Player “A” and Player “B” and load “Arlandria” by the Foo Fighters.

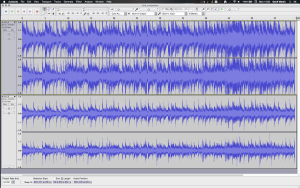

Let’s say that, when you go back and forth, the song is quieter in Player A than in Player B (see Figure 1, below, as an example…). This might be for different reasons (like the volume knobs are not aligned, for example…)

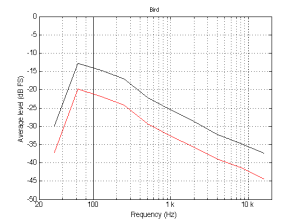

Now load “Bird on a Wire” by Jennifer Warnes and play that (which is mastered to have a much lower average (RMS) level than Arlandria – therefore it should be quieter…).

Let’s say that, when you go back and forth, the song is now louder in Player A than in Player B (as is shown in Figure 2). This means that the “volume knob” theory above doesn’t hold (if it did, Player A would always be quieter than Player B).

So, Arlandria is louder in “B” but Bird on a Wire is louder in “A”. This means that at least one (perhaps both) of the two players is doing something with the dynamic range of the tunes. What is most likely is that at least one of the players is making the Foo Fighters too quiet and Jennifer Warnes too loud (relative to their original mastering).

There might be a good reason for this. It could be that Player “A” is designed to be a radio station – good for background music where you press “play” and you never have to chase the signal with the volume control (in other words, you don’t have to suddenly drop the volume during dinner because the Foo Fighters started playing). However, there might be side effects, since it is likely that the dynamic range compression in Player A (assuming for simplicity’s sake that it is only Player A in this example that is mucking around with the levels) is happening on the signal in real-time – and is not based on a gain offset on a per-tune basis (as some software players do…)

So, as you can see, there’s not much of a trick here. It’s just a matter of doing a direct player-to-player comparison, making sure that as many components in the signal path as possible remain constant. (For example, be careful that your computer doesn’t have a compressor on its headphone output if that’s what you’re using. Many computer headphone outputs have some kind of dynamic range stuff happening like noise gating, peak compression / soft clipping or just general envelope shaping.) Best to use a digital output from your computer to a reliable DAC that just does what it’s told, and doesn’t add any fancy effects like volume levelling.

Some analysis

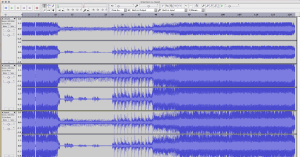

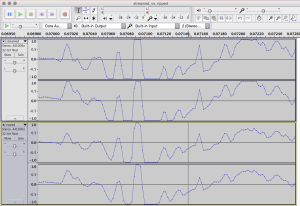

I took two streaming services (each of them delivering their “high quality” option) and routed their outputs to Max/MSP using Soundflower and recorded each as a 16-bit .wav file. Both were set to maximum volume, as was the master volume of my MacBook Pro.

I then used Audacity to manually time-align and trim the files to have exactly the same length (in samples). The length of each file was arbitrary and based more on when the chorus ended than anything else…

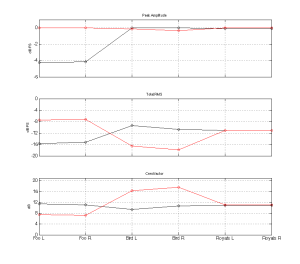

For the analyses of peak level (listed in dB FS, even though I know that dB FS is only supposed to be used for RMS measurements… sue me…), total RMS level, and crest factor, I just wrote a small routine in Matlab to look after this for me. The two values shown are for the Left and Right channels for each tune.
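The Matlab routine is not reproduced in this posting. As a rough equivalent, here is a minimal Python sketch of the three measurements. It assumes the samples of one channel have already been read into a list of floats normalised so that full scale is ±1.0, and the sine tone at the end is only a hypothetical test signal for checking the arithmetic.

```python
import math

def peak_db(samples):
    """Peak amplitude in dB relative to full scale (|x| = 1.0)."""
    return 20.0 * math.log10(max(abs(s) for s in samples))

def rms_db(samples):
    """RMS level over the whole excerpt, in dB relative to full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_square)

def crest_factor_db(samples):
    """Crest factor: how far the peak sits above the RMS level, in dB."""
    return peak_db(samples) - rms_db(samples)

# Sanity check with one second of a full-scale 1 kHz sine at 48 kHz.
# Its peak is 0 dB FS and its crest factor is 20*log10(sqrt(2)), about 3.01 dB.
# (Digital silence would need special handling, since log10(0) is undefined.)
fs = 48000
tone = [math.sin(2.0 * math.pi * 1000.0 * i / fs) for i in range(fs)]
left_peak = peak_db(tone)
left_rms = rms_db(tone)
left_crest = crest_factor_db(tone)
print(f"peak {left_peak:.4f} dB FS, RMS {left_rms:.4f} dB FS, crest {left_crest:.4f} dB")
```

With this convention the crest factor is simply the peak value minus the RMS value, which is how the numbers in the tables relate to each other.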

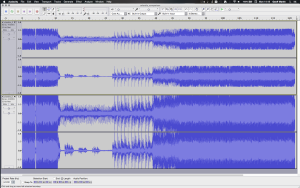

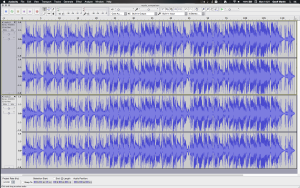

I’ve also supplied a screen shot of the entire tracks analysed, shown in Audacity, so you can get an intuitive feel of the difference between the two streaming services.

Some things to look out for here: If the difference in the peak amplitudes matches the difference in the RMS values (and therefore the crest factors match each other), then the difference in the output levels of the two streaming services is the result of a simple change in level for the entire tune – as if someone had changed the volume setting and left it alone for the whole song (this is the technique ostensibly used by at least one ubiquitous computer-based audio player). However, if the crest factors are different for the two streaming services for a given song (meaning that the peak amplitudes and the RMS values differ by different amounts from service to service), then some time-variant dynamic processing is going on – probably something like a compressor, a peak limiter, a soft-clipper, or just a clipper.
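The reasoning above can be checked on a synthetic signal. The sketch below is only an illustration: the decaying tone is a made-up test signal, and the 6 dB boost followed by hard clipping is a deliberately crude stand-in for a peak limiter, not a model of what either service does.

```python
import math

def crest_factor_db(samples):
    """Peak-to-RMS ratio of the excerpt, in dB."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(peak / rms)

# Hypothetical test signal: a decaying 220 Hz tone, so there is a clear
# peak at the start followed by a quieter tail.
fs = 44100
original = [0.9 * math.exp(-3.0 * i / fs) * math.sin(2.0 * math.pi * 220.0 * i / fs)
            for i in range(fs)]

# Case 1: a fixed volume change of -6 dB, left alone for the whole excerpt.
gain = 10.0 ** (-6.0 / 20.0)
turned_down = [gain * s for s in original]

# Case 2: boost by 6 dB, then hard-clip at full scale.
boost = 10.0 ** (6.0 / 20.0)
clipped = [max(-1.0, min(1.0, boost * s)) for s in original]

cf_original = crest_factor_db(original)
cf_turned_down = crest_factor_db(turned_down)
cf_clipped = crest_factor_db(clipped)
print(f"original {cf_original:.2f} dB, turned down {cf_turned_down:.2f} dB, "
      f"clipped {cf_clipped:.2f} dB")
```

The fixed gain moves the peak and the RMS by the same amount, so the crest factor is unchanged. The clipped version loses more from its peak than from its RMS, so its crest factor drops.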

Foo Fighters – Arlandria (first 81.67 seconds)

| Streaming Service “A” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | -4.2209 | -4.1578 |
| RMS (dB FS) | -15.6585 | -15.2328 |
| Crest factor (dB) | 11.4376 | 11.0750 |

| Streaming Service “B” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | 0.0 | 0.0 |
| RMS (dB FS) | -7.4978 | -7.1929 |
| Crest factor (dB) | 7.4978 | 7.1929 |

Jennifer Warnes – Bird on a Wire (first 59.43 seconds)

| Streaming Service “A” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | 0.0 | 0.0 |
| RMS (dB FS) | -9.4162 | -10.6752 |
| Crest factor (dB) | 9.4162 | 10.6752 |

| Streaming Service “B” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | -0.1287 | -0.3205 |
| RMS (dB FS) | -16.5152 | -17.8888 |
| Crest factor (dB) | 16.3865 | 17.5683 |

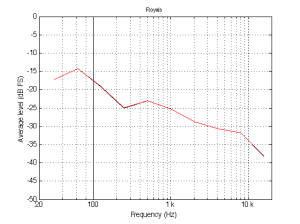

Lorde – Royals (first 73.46 seconds)

| Streaming Service “A” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | -0.0863 | -0.0863 |
| RMS (dB FS) | -11.0863 | -11.0712 |
| Crest factor (dB) | 11.0000 | 10.9849 |

| Streaming Service “B” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | -0.0005 | -0.0005 |
| RMS (dB FS) | -11.0509 | -11.0365 |
| Crest factor (dB) | 11.0504 | 11.0360 |
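Plugging the left-channel values from the tables above into a few lines of Python makes the two checks explicit: how far “A” sits from “B” in RMS level for each tune, and whether the crest factors agree. The numbers are copied from the tables; only the variable names are made up for this sketch.

```python
# Left-channel values from the tables above: (peak dB FS, RMS dB FS).
measurements = {
    "Arlandria": {"A": (-4.2209, -15.6585), "B": (0.0, -7.4978)},
    "Bird on a Wire": {"A": (0.0, -9.4162), "B": (-0.1287, -16.5152)},
    "Royals": {"A": (-0.0863, -11.0863), "B": (-0.0005, -11.0509)},
}

results = {}
for song, services in measurements.items():
    peak_a, rms_a = services["A"]
    peak_b, rms_b = services["B"]
    rms_offset = rms_a - rms_b        # level of A relative to B
    crest_a = peak_a - rms_a          # crest factor = peak minus RMS
    crest_b = peak_b - rms_b
    crest_diff = crest_a - crest_b
    results[song] = (rms_offset, crest_diff)
    print(f"{song}: A is {rms_offset:+.2f} dB relative to B (RMS), "
          f"crest factors differ by {crest_diff:+.2f} dB")
```

For Arlandria, “A” comes out roughly 8 dB quieter than “B”, while for Bird on a Wire it is roughly 7 dB louder, and the crest factors differ by several dB in both cases. A fixed volume offset between the two players would give the same RMS offset for every tune and no difference in crest factor. For Royals, both differences are within a few hundredths of a dB.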

So, what confuses me at the end of this is that Royals is almost identical in both. And, before you jump to conclusions, I checked… I did not screw up the captures. When I subtract “B” from “A”, I can hear artefacts typical of a psychoacoustic CODEC, so I know that the two are different enough to be measurably different. What doesn’t make sense is that, if “A” is dynamically compressing the other two tracks more than “B” (which it appears to be), then why is it not messing around with “Royals”? Hmmmm… I guess that more investigation is necessary with respect to this little anomaly – but not today… Sorry.
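The subtraction mentioned above is sometimes called a null test. Here is a minimal sketch of how the residual could be quantified, assuming the two captures are already sample-aligned, equally long, and normalised to ±1.0. The two signals in the example are hypothetical stand-ins, not the actual captures.

```python
import math

def rms(samples):
    """Linear RMS value of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def null_test_db(a, b):
    """Level of the residual (a - b) relative to a, in dB.

    Assumes both captures are sample-aligned and the same length.
    A very negative result means the two captures nearly cancel.
    """
    if len(a) != len(b):
        raise ValueError("captures must have the same number of samples")
    residual = [x - y for x, y in zip(a, b)]
    # The small floor avoids log10(0) when the captures are identical.
    return 20.0 * math.log10(max(rms(residual), 1e-12) / rms(a))

# Hypothetical stand-ins for two captures of the same tune: `b` is `a`
# plus a small added tone that mimics a low-level coding error.
fs = 44100
a = [0.5 * math.sin(2.0 * math.pi * 440.0 * i / fs) for i in range(fs)]
b = [x + 0.005 * math.sin(2.0 * math.pi * 3000.0 * i / fs) for i, x in enumerate(a)]

residual_level = null_test_db(a, b)
print(f"residual is {residual_level:.1f} dB relative to capture A")
```

In this made-up case the residual sits 40 dB below the signal. Listening to the residual, as described above, says more about what kind of difference it is than the single number does.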

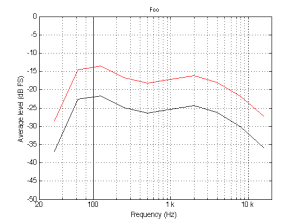

We can also check to see if the dynamic range compression that’s being used is a multi-band type or not by doing a quick spectral analysis of the excerpts. I took each of the 6 files (three songs x 2 streaming services) and did the following:

- Filtered the left channel of each track using 3rd-order Butterworth highpass and lowpass filters with cutoff frequencies between the ISO octave-band centres ranging from 31.5 Hz to 16 kHz inclusive.

- Calculated the total RMS value of each of the entire band-limited versions of the tracks

- Plotted the results in dB FS
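The exact cutoff frequencies are not listed in the steps above. One plausible reading, and it is only an assumption, is that each band's highpass and lowpass cutoffs sit half an octave either side of its centre, which puts every cutoff between two neighbouring centres. The sketch below just computes those band edges; the filtering itself is left out.

```python
import math

# Nominal ISO octave-band centre frequencies from 31.5 Hz to 16 kHz.
centres_hz = [31.5, 63.0, 125.0, 250.0, 500.0,
              1000.0, 2000.0, 4000.0, 8000.0, 16000.0]

# Assumption: band edges at centre / sqrt(2) and centre * sqrt(2),
# i.e. half an octave below and above each centre frequency.
bands = [(fc / math.sqrt(2.0), fc * math.sqrt(2.0)) for fc in centres_hz]

for fc, (low, high) in zip(centres_hz, bands):
    print(f"{fc:8.1f} Hz band: highpass at {low:8.1f} Hz, lowpass at {high:8.1f} Hz")
```

Note that the upper edge of the 16 kHz band lands above the Nyquist frequency of a 44.1 kHz file, so in practice the top band would be limited by the sampling rate rather than by its lowpass filter.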

Now, before you start complaining about my methodology here, remember that I’m just using a “big paint brush” approach to checking this out. I’m just interested in seeing if there are any significant differences between the spectral balances of a single track coming from the two streaming services. The results are plotted below.

What you can see in those three plots is that the spectra of a given tune from the two services are roughly the same – only the overall levels are different (which roughly correspond to the RMS values shown in Figure 4).

In case you’re curious, if you level-align the plots in Figures 5 through 7 inclusive, you’ll see that the worst-case differences in spectra for the three tunes across the entire frequency range analysed are about 0.7 dB for Arlandria, 0.9 dB for Bird on a Wire, and 0.08 dB for Royals. However, be a little careful here, since this is an RMS value of the entire track – and may not reflect the more “instantaneous” spectral differences, say, on a clipped peak in “Bird on a Wire”, for example. I won’t bother to analyse those, since it would not be surprising for a peak compressor to generate distortion-like harmonics when it’s changing its gain. This could result in a spectral difference that looks like a multi-band compressor, but isn’t. Windowing can be distracting sometimes…
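As a sketch of what level-aligning two such plots and reading off the worst-case difference could look like, here is a small Python function. It assumes the per-band RMS levels are available as two lists in the same band order, and it treats the mean difference across bands as the overall level offset, which is one choice among several. The band levels in the example are made up for illustration and are not the measured data.

```python
def worst_case_difference_db(levels_a, levels_b):
    """Largest per-band mismatch after removing the overall level offset.

    Both arguments are per-band RMS levels in dB, in the same band order.
    The mean difference across bands is used as the overall offset
    (an assumption; other alignment choices are possible).
    """
    diffs = [a - b for a, b in zip(levels_a, levels_b)]
    offset = sum(diffs) / len(diffs)
    return max(abs(d - offset) for d in diffs)

# Made-up band levels in dB FS, for illustration only.
service_a = [-40.0, -30.0, -25.0, -22.0, -24.0]
service_b = [-32.0, -22.0, -17.5, -14.0, -16.0]

spread = worst_case_difference_db(service_a, service_b)
print(f"worst-case spectral difference after level alignment: {spread:.2f} dB")
```

Here the two made-up spectra differ by about 8 dB overall, but once that offset is removed the largest remaining band difference is 0.4 dB.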

The conclusion

One moral of the story here is that knowing the psychoacoustic CODEC and bitrate used by your streaming service is not necessarily enough information to know whether or not you’re getting the audio quality you may want.

The other moral is that you shouldn’t necessarily trust that a “high quality” version of a streaming service is the same as the “high quality” version of another streaming service.

Another message here is that you should not compare one service to another one and think that you’re only comparing CODECs – for example, don’t listen to Deezer vs. Spotify vs. Tidal in order to find out if you can hear the difference between MP3, Ogg Vorbis, and lossless. You might hear a difference, but it might not be caused by the CODECs…

Finally, an important moral is: if you’re using a streaming service to test loudspeakers (for example, in a store) – make sure that the version of the tune that you’re listening to is the version that you know – from the same streaming service and the same quality level. Otherwise, the problems (or just differences) you might hear might not be the fault of the speaker… (For example, if your streaming service at home is “worse” than the store’s – it might make the speakers sound better than you think just because the source is better…) Remember garbage in = garbage out, regardless of whether the description is more applicable to the loudspeakers at the store or the ones at home.

Post-script: Comparison to CD

I thought it might be interesting to compare a CD version to the streaming versions. Unfortunately, the only disc I have of the three tracks here is the Foo Fighters. I have the original Famous Blue Raincoat album from once-upon-a-time, but I don’t have the 20th anniversary re-release, which is what the streaming services appear to be using.

| Streaming Service “A” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | -4.2209 | -4.1578 |
| RMS (dB FS) | -15.6585 | -15.2328 |
| Crest factor (dB) | 11.4376 | 11.0750 |

| Streaming Service “B” | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | 0.0 | 0.0 |
| RMS (dB FS) | -7.4978 | -7.1929 |
| Crest factor (dB) | 7.4978 | 7.1929 |

| Ripped from CD | Left | Right |
| --- | --- | --- |
| Peak Amplitudes (dB FS) | -0.0003 | -0.0003 |
| RMS (dB FS) | -10.7888 | -10.3700 |
| Crest factor (dB) | 10.7885 | 10.3697 |

So, the data shows that, at least for this tune, the version ripped from a CD is different again from the two streaming services. This can also be seen if we put the three versions side-by-side on a DAW.

As can be seen below, this means that some samples are clipped in the process in the streaming service that are not clipped in the original…
