Thanks to Claudia Haase and Thomas Lischker at RTW Radio–Technische (www.rtw.de) for their kind permission to use graphics from their product line for this section.

When you sit down to do a recording – any recording, you have two basic objectives:

1) make the recording sound nice aesthetically

2) make sure that the technical quality of the recording is high.

Different people and record labels will place their priorities differently (I’m not going to mention any names here, but you know who you are...)

One of the easiest ways to guarantee a high technical quality is to pay particular attention to your gain and levels at various points in the recording chain. This sentence is true not only for the signal as it passes out and into various pieces of equipment (i.e. from a mixer output to a tape recorder input), but also as it passes through various stages within one piece of equipment (in particular, the signal level as it passes through a mixer). The question is: “what’s the best level for the signal at this point in the recording chain?”

There are two beasts hidden in your equipment that you are constantly trying to avoid and conceal as you do your recording. On a very general level, these are noise and distortion.

Noise can be generally defined as any audio in the signal that you don’t want there. If we restrict ourselves to electrical noise in recording equipment, then we’re basically talking about hiss and hum. The reasons for this noise and how to reduce it are discussed in a different chapter, however, the one inescapable fact is that noise cannot be avoided. It can be reduced, but never eliminated. If you turn on any piece of audio equipment, or any component within any piece of equipment, you get noise. Normally, because the noise stays at a relatively constant level over a long period of time and because we don’t bother recording signals lower in level than the noise, we call it a noise floor.

How do we deal with this problem? The answer is actually quite simple: we turn up the level of the signal so that it’s much louder than the noise. We then rely on psychoacoustic masking (and, if we’re really lucky, the threshold of hearing) to cover up the fact that the noise is there. We don’t eliminate the noise, we just hide it – and the louder we can make the signal, the better it’s hidden. This works great, except that we can’t keep increasing the level of the signal because at some point, we start to distort it.

If the recording system was absolutely perfect, then the signal at its output would be identical to the signal at the input of the microphone. Of course, this isn’t possible. Even if we ignore the noise floor, the signals at the two ends of the system are not identical – the system itself modifies or distorts the signal a little bit. The less the modification, the lower the distortion of the signal and the better it sounds.

Keep in mind that the term “distortion” is extremely general – different pieces of equipment and different systems will have different detrimental effects on different signals. There are different ways of measuring this, but we typically look at the amount of distortion in percent. This is a measurement of how much extra power is included in the signal that shouldn’t be there. The higher the percentage, the more distortion and the worse the signal. (See the chapter on distortion measurements in the Electroacoustic Measurements section.)

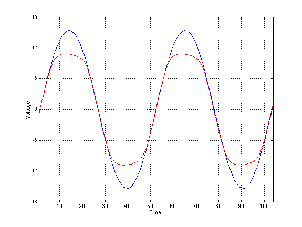

There are two basic causes of distortion in any given piece of equipment. The first is the normal day–to–day error of the equipment in transmitting or recording the signal. No piece of gear is perfect, and the error that’s added to the signal at the output is basically always there. The second, however, is a distortion of the signal caused by the fact that the level of the signal is too high. The output of every piece of equipment has a maximum voltage level that cannot be exceeded. If the level of the signal is set so high that it should be greater than the maximum output, then the signal is clipped at the maximum voltage as is shown in Figure 10.2.

For our purposes at this point in the discussion, I’m going to over–simplify the situation a bit and jump to a hasty conclusion. Distortion can be classified as a process that generates unwanted signals that are added to our program material. In fact, this is exactly what happens – but the unwanted signals are almost always harmonically related to the signal whereas your run–of–the–mill noise floor is completely unrelated harmonically to the signal. Therefore, we can group distortion with noise under the heading “stuff we don’t want to hear” and look at the level of that material as compared to the level of the program material we’re recording – in other words the “stuff we do want to hear.” This is a small part of the reason that you’ll usually see a measurement called “THD+N” which stands for “Total Harmonic Distortion plus Noise” – the stuff we don’t want to hear.

So, we need to make the signal loud enough to mask the noise floor, but quiet enough so that it doesn’t distort, thus maximizing the level of the signal compared to the level of the distortion and noise components. How do we do that? And, more importantly, how do we keep the signal at an optimal level so that we have the highest level of technical quality? In order to answer this question, we have to know the exact behaviour of the particular piece of gear that we’re using – but we can make some general rules that apply for groups of gear. These three groups are 1) digital gear, 2) analog electronics and 3) analog tape.

As we’ve seen in previous chapters, digital gear has relatively easily defined extremes for the audio signal. The noise floor is set by the level of the dither, typically with a level of one half of an LSB. The signal to noise ratio of the digital system is dependent on the number of bits that are used for the signal – increasing by 6.02 dB per bit used. Since the level of the dither is typically half a bit in amplitude, we subtract 3 dB from our signal to noise ratio calculated from the number of bits. For example, if we are recording a sine wave that is using 12 of the 16 bits on a CD and we make the usual assumptions about the dither level, then the signal to noise ratio for that particular sine wave is:

(12 bits * 6 dB per bit) – 3 dB

= 69 dB

Therefore, in the above example, we can say that the noise floor is 69 dB below the signal level. The more bits we use for the signal (and therefore the higher its peak level) the greater the signal to noise ratio and therefore the better the technical quality of the recording. (Do not confuse the signal to noise ratio with the dynamic range of the system. The former is the ratio between the signal and the noise floor. The latter is the ratio between the maximum possible signal and the noise floor – as we’ll see, this raises the question of how to define the maximum possible level...)

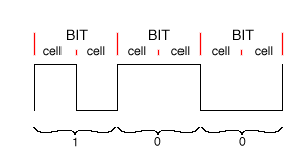

We also know from previous chapters that digital systems have a very unforgiving maximum level. If you have a 16 bit system, then the peak level of the signal can only go to the maximum level of the system defined by those 16 bits. There is some debate regarding what you can get away with when you hit that wall – some people say that 2 consecutive samples at the maximum level constitutes a clipped signal. Others are more lenient and accept one or two more consecutively clipped samples. Ignoring this debate, we can all agree that, once the peak of a sine wave has reached the maximum allowable level in a digital system, any increase in level results in a very rapid increase in distortion. If the system is perfectly aligned, then the sine wave starts to approach a square wave very quickly (ignoring a very small asymmetry caused by the fact that there is one extra LSB for the negative–going portion of the wave than there is for the positive side in a PCM system). See Figure 10.2 to see a sample input and output waveform. The “consecutively clipped samples” that we’re talking about is a measurement of how long the flattened part of the waveform stays flat.

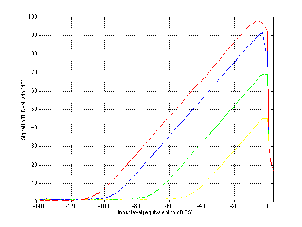

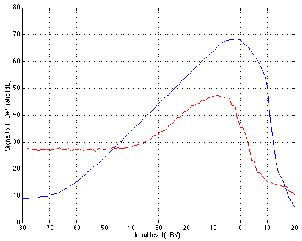

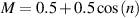

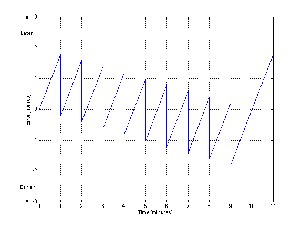

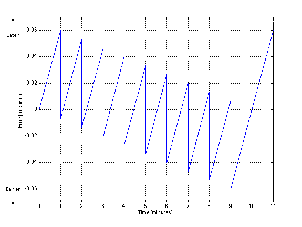

If we were to draw a graph of this behaviour, we would result in the plot shown in Figure 10.3. Notice that we’re looking at the Signal to THD+N ratio vs. the level of the signal.

The interesting thing about this graph is that it’s essentially a graph of the peak signal level vs. audio quality (at least technically speaking... we’re not talking about the quality of your mix or the ability of your performers...). We can consider that the X–axis is the peak signal level in dB FS and the Y–axis is a measurement of the quality of the signal. Consequently, we can see that the closer we can get the peak of the signal to 0 dB FS the better the quality, but if we try to increase the level beyond that, we get very bad very quickly.

Therefore, the general moral of the story here is that you should set your levels so that the highest peak in the signal for the recording will hit as close to 0 dB FS as you can get without going over it. In fact, there are some problems with this – you may actually wind up with a signal that’s greater than 0 dB FS by recording a signal that’s less than 0 dB FS in some situations... but we’ll look at that later... this is still the introduction.

Analog electronics (well, operational amplifiers really... but pretty well everything these days is built with op amps, so we’ll stick with the generalized assumption for now...) have pretty much the same distortion characteristics as digital, but with a lower noise floor (unless you have a very high resolution digital system or a really crappy analog system). As can be seen in Figure 10.4, the general curve for an analog microphone preamplifier, mixing console, or equalizer (note that we’re not talking about dynamic range controllers like compressors, limiters, expanders and gates) looks the same as the curve for digital gear shown in Figure 10.3. If you’ve got a decent piece of analog gear (even something as cheap as a Mackie mixer these days) then you should be able to hit a maximum signal to noise ratio of about 125 dB or so when the signal is at some maximum level where the peak level is bordering on the clipping level (somewhere around +/- 13.5 V or +/- 16.5 V, depending on the power supply rails and the op amps used). Any signal that goes beyond that peak level causes the op amps to start clipping and the distortion goes up rapidly (and bringing the quality level down quickly).

So, the moral of the story here is the same as in the digital world. As a general rule, it’s good for analog electronics to keep your signal as high as possible without hitting the maximum output level and therefore clipping your signal.

One minor problem in the analog electronics world is knowing exactly what level causes your gear to distort. Typically, you can’t trust your meters as we’ll see later, so you’ll either have to come up with an alternate metering method (either using an oscilloscope, an external meter, or one of your other pieces of gear as the meter) or just keep your levels slightly lower than optimal to ensure that you don’t hit any brick walls.

One nice trick that you can use is in the specific case where you’re coming from an analog microphone preamplifier or analog console into a digital converter (depending on its meters). In this case, you can pre–set the gain at the input stage of the mic pre such that the level that causes the output stage of the mixer to clip is also the level that causes the input stage of the ADC to clip. In this case, the meters on your converter can be used instead of the output meters on your microphone preamplifier or console. If all the gear clips at the same level and your stay just below that level at the recording’s peak, then you’ve done a good job. The nice thing about this setup is that you only need to worry about one meter for the whole system.

Analog tape is a different kettle of fish. The noise floor in this case is the same as in the analog and digital worlds. There is some absolute noise floor that is inherent on the tape (the reasons for which are discussed in the chapter on analog tape, oddly enough...) but the distortion characteristics are different.

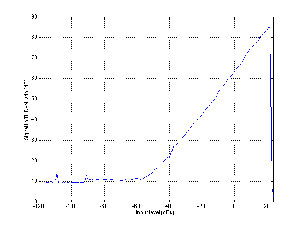

When the signal level recorded on an analog tape is gradually increased from a low level, we see an increase in the signal to noise ratio because the noise floor stays put and the signal comes up above it. At the same time however, the level of distortion gradually increases. This is substantially different from the situation with digital signals or op amps because the clipping isn’t immediate – it’s a far more gradual process as can be seen in Figure 10.5.

The result of this softer, more gradual clipping of the waveform is twofold. Firstly, as was mentioned above, the increase in distortion is more gradual as the level is increase. In addition, because the change in the slope of the waveform is less abrupt, there are fewer very high frequency components resulting from the distortion. Consequently, there are a large number of people who actually use this distortion as an integral part of their processing. This tape compression as it is commonly known, is most frequently used for tracking drums.

Assuming that we are trying to maintain the highest possible technical quality and assuming that this does not include tape compression, then we are trying to keep the signal level at the high point on the graph in Figure 5. This level of 0 dB VU is a so–called nominal level at which it has been decided (by the tape recorder manufacturer, the analog tape supplier and the technician that works in your studio) that the signal quality is best. Your goal in this case is to keep the average level of the signal for the recording hovering around the 0 dB VU mark. You may go above or below this on peaks and dips – but most of the time, the signal will be at an optimal level.

Notice that there are two fundamentally different ways of thinking presented above. In the case of digital gear or analog electronics, you’re determining your recording level based on the absolute maximum peak for the entire recording. So, if you’re recording an entire symphony, you find out what the loudest part will be and make that point in the recording as close to maximum as possible. Look after the peak and the rest will look after itself. In contrast, in the case of analog tape, we’re not thinking of the peak of the signal, we’re concentrating on the average level of the signal – the peaks will look after themselves.

So, now that we’ve got a very basic idea of the objective, how do we make sure that the levels in our recording system are optimized? We use the meters on the gear to give us a visual indication of the levels. The only problem with this statement is that it assumes that the meter is either telling you what you want to know, or that you know how to read the meter. This isn’t necessarily as dumb as it sounds.

A discussion of meters can be divided into two subtopics. The first is the issue of scale – what actual signal level corresponds to what indication on the meter. The second is the issue of ballistics – how the meter responds in time to changes in level.

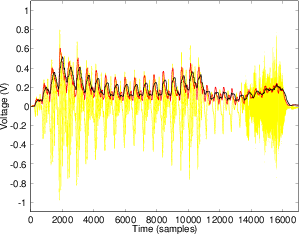

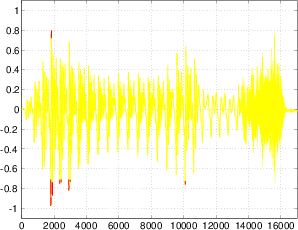

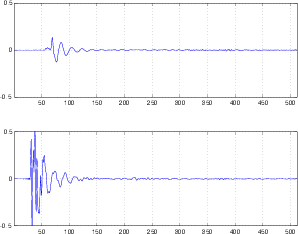

Before we begin, we’ll take a quick review of the difference between the peak and the RMS value of a signal. Figure 10.7 shows a portion of a recorded sound wave. In fact, it’s an excerpt of a recording of male speech.

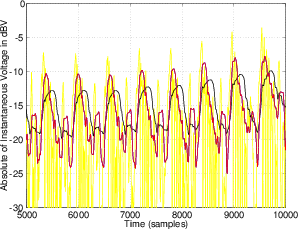

One simple measurement of the signal level is to continuously look at its absolute value. This is simply done by taking the absolute value of the signal shown in Figure 10.8.

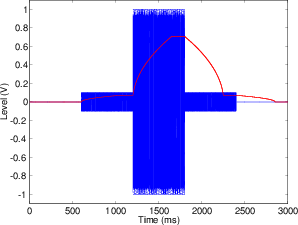

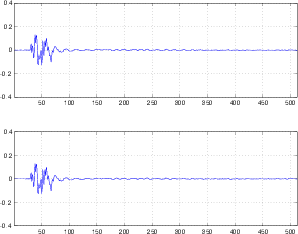

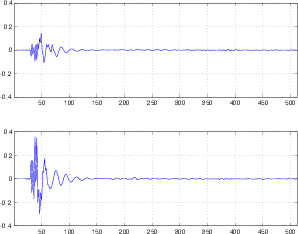

A second, more complex method is to use the running RMS of the signal. As we’ve already discussed, the relationship between the RMS and the instantaneous voltage is dependent on the time constant of the RMS detection circuit. Notice in Figure 10.9 that not only do the highest levels in the RMS signals differ (the shorter the time constant, the higher the level) but their attack and decay slopes differ as well.

A level meter tells you the level of the signal – either the peak or the RMS value of the level depending on the meter – on a relative scale. We’ll look at these one at a time, and deal with the respective scale and ballistics for each.

If you look at a microphone preamplifier or the input module of a mixing console, you’ll probably see a red LED. This peak light is designed to light up as a warning signal when the peak level (the instantaneous voltage – or more likely the absolute value of the instantaneous voltage) approaches the voltage where the components inside the equipment will start to clip. More often than not, this level is approximately 3 dB below the clipping level. Therefore, if the device clips at +/– 16.5 V then the peak light will come on if the signal hits 11.6673 V or –11.6673 V (3 dB less than 16.5 V or 16.5 V / sqrt(2)). Remember that the level at which the peak indicator lights is dependent on the clip level of the device in question – unlike many other meters, it does not indicate an absolute signal strength. So, without knowing the exact characterstics of the equipment, we cannot know what the exact level of the signal is when the LED lights. Of course, the moral of that issue is “know your equipment.”

For example, take a look at Figure 10.13. Let’s assume that the signal is passing through a piece of equipment that clips at a maximum voltage of 10 V. The peak indicator will more than likely light up when the signal is 3 dB below this level. Therefore any signal greater than 7.07 V or less than –7.07 V will cause the LED to light up.

Note that a peak indicator is an instantaneous measurement. If all is working properly, then any signal of any duration (no matter how short) will cause the indicator to light if the signal strength is high enough.

Also note that the peak indicator lights when the signal level is slightly lower than the level where clipping starts, so just because the light lights doesn’t mean that you’ve clipped your signal... but you’re really close.

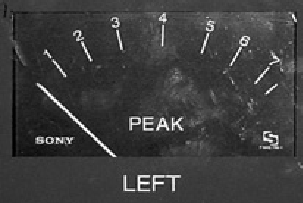

The Volume Unit Meter (better known as a VU Meter) shows what is theoretically an RMS level reading of the signal passing through it. Its display is calibrated in decibels that range from –20 dB VU up to +3 dB VU (the range of 0 dB VU to +3 dB VU are marked in red). Because the VU meter was used primarily for recording to analog tape, the goal was to maintain the RMS of the signal at the “optimal” level on the tape. As a result, VU meters are centered around 0 dB VU – a nominal level that is calibrated by the manufacturer and the studio technician to match the optimal level on the tape.

In the case of an analog tape recorder, we can monitor the signal that is recorded to the tape or the signal coming off the tape. Either way, the meter should be showing us an indication of the amount of magnetic flux on the medium. Depending on the program material being recorded, the policy of the studio, and the tape being used, the level corresponding to 0 dB VU will be something like 250 nWb/m or so. So, assuming that the recorder is calibrated for 250 nWb/m, then when the VU Meter reads 0 dB VU, the signal strength on the tape is 250 nWb per meter. (If the term “nWb/m” is unfamiliar, of if you’re unsure how to decide what your optimal level should be, check out the chapter on analog tape.)

In the case of other equipment with a VU Meter (a mixing console, for example), the indicated level on the meter corresponds to an electrical signal level, not a magnetic flux level. In this case, in almost all professional recording equipment, 0 dB VU corresponds to +4 dBu or, in the case of a sine tone that’s been on for a while, 1.228VRMS. So, if all is calibrated correctly, if a 1 kHz sine tone is passed through a mixing console and the output VU meters on the console read 0 dB VU, then the sine tone should have a level of 1.228VRMS between pins 2 and 3 on the XLR output. Either pin 2 or 3 to ground (pin 1) will be half of that value.

In addition to the decibel scale on a VU Meter, it is standard to have a second scale indicated in percentage of 0 dB VU where 0 dB VU = 100%. VU Meters are subdivided into two types – the Type A scale has the decibel scale on the top and the 0% to 100% in smaller type on the bottom as is shown in Figure 10.14. The Type B scale has the 0% to 100% scale on the top with the decibel equivalents in smaller type on the bottom.

If you want to get really technical, the offical definition of the VU Meter specifies that it reads 0 dB VU when it is bridging a 600 Ω line and the signal level is +4 dBm.

Since VU Meters are essentially RMS meters, we have to remember that they do not respond to instantaneous changes in the signal level. The ballistics for VU Meters have a carefully defined rise and decay time – meaning that we know how fast they respond to a sudden attack or a sudden decay in the sound – slowly. These ballistics are defined using a sine tone that is suddenly switched on and off. If there is no signal in the system and a sine tone is suddenly applied to the VU Meter, then the indicator (either a needle or a light) will reach 99% of the actual RMS level of the signal in 300 ms. In technical terms, the indicator will reach 99% of full–scale deflection in 300 ms. Similarly, when the sine tone is turned off and the signal drops to 0 V instantaneously, the VU meter should take 300 ms to drop back 99% of the way (because the meter only sees the lack of signal as a new signal level, therefore it gets 99% of the way there in 300 ms – no matter where it’s going).

Also, there is a provision in the definition of a VU Meter’s ballistics for something called overshoot. When the signal is suddenly applied to the meter, the indicator jumps up to the level it’s trying to display, but it typically goes slightly over that level and then drops back to the correct level. That amount of overshoot is supposed to be no more than 1.5% of the actual signal level. (If you’re picky, you’ll notice that there is no overshoot plotted in Figures 10.15 and 10.16.)

The good thing about VU Meters is that they show you the average level of the signal – so they’re great for recording to analog tape or for mastering purposes where you want to know the overall general level of the signal. However, they’re very bad at telling you the peak level of the signal – in fact, the higher the crest factor, the worse they are at telling you what’s going on. As we’ve already seen, there are many applications where we need to know exactly what the peak level of the signal is. Once upon a time, the only place where this was necessary was in broadcasting – because if you overload a transmitter, bad things happen. So, the people in the broadcasting world didn’t have much use for the VU Meter – they needed to see the peak of the program material, so the Peak Program Meter or PPM was developed in Europe around the same time as the VU Meter was in development in the US.

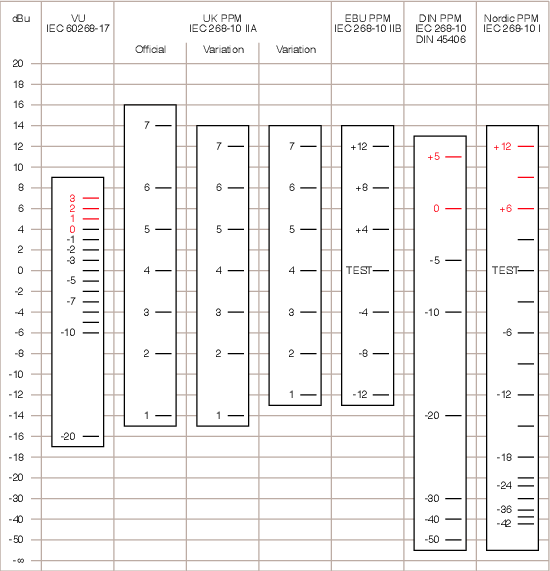

A PPM is substantially different from a VU Meter in many respects. These days it has many different incarnations – particularly in its scale, but the traditional one that most people think of is the UK PPM (also known as the BBC PPM). We’ll start there.

The UK PPM looks very different from a VU Meter – it has no decibel markings on it – just numbered indications from “Mark 0” up to “Mark 7.” In fact, the PPM is divided in decibels, they just aren’t marked there – generally, there are 4 decibels between adjacent marks – so from Mark 2 to Mark 3 is an increase of 4 dB. There are two exceptions to this rule – there are 6 decibels between Marks 0 and 1 (but note that Mark 0 is not marked). In addition, there are 2 decibels between Mark 7 and Mark 8 (which is also not marked).

Because we’re thinking now in terms of the peak signal level, the nominal level is less important than the maximum, however, PPM’s are calibrated so that Mark 4 corresponds to 0 dBu. Therefore if the PPM at the output stage on a mixing console read Mark 5 for a 1 kHz sine wave, then the output level is 1.228VRMS between pins 2 and 3 (because Mark 5 is 4 dB higher than Mark 4, making it +4 dBu).

There are a number of other PPM Scales available to the buying public. In addition to the UK PPM, there’s the EBU PPM, the DIN PPM and the Nordic PPM. Each of these has a different scale as is shown in Table 10.1 and the corresponding Figure 10.19.

| Meter | Standard | Minimum Scale and | Maximum Scale and | Nominal Scale and |

| Corresponding Level | Corresponding Level | Corresponding Level | ||

| VU | IEC 60268–17 | –20 dB = –16 dBu | +3 dB = 7 dBu | 0 dB = 4 dBu |

| UK (BBC) PPM | IEC 268–10 IIA | Mark 1 = –14 dBu | Mark 7 = 12 dBu | Mark 4 = 0 dBu |

| EBU PPM | IEC 268–10 IIB | –12 dB = –12 dBu | +12 dB = 12 dBu | Test = 0 dBu |

| DIN PPM | IEC 268–10 / DIN 45406 | –50 dB = –44 dBu | +5 dB = 11 dBu | 0 dB = 6 dBu |

| Nordic PPM | IEC 268–10 I | –42 dB = –42 dBu | +12 dB = 12 dBu | Test (or 0 dB) = 0 dBu |

Let’s be complete control freaks and build the perfect PPM. It would show the exact absolute value of the voltage level of the signal all the time. The needle would dance up and down constantly and after about 3 seconds you’d have a terrible headache watching it. So, this is not the way to build a PPM. In fact, what is done is the ballistics are modified slightly so that the meter responds very quickly to a sudden increase in level, but it responds very slowly to a sudden drop in level – the decay time is much slower even than a VU Meter. You may notice that the PPM’s listed in Table 1 and Figure 10.19 are grouped into two “types” Type I and Type II. These types indicate the characteristics of the ballistics of the particular meter.

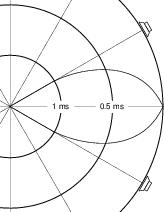

The attack time of a Type I PPM is defined using an integration time of 5 ms – which corresponds to a time constant of 1.7 ms. Therefore, a tone burst that is 10 ms long will result in the indicator being 1 dB lower than the correct level. If the burst is 5 ms long, the indicator will be 2 dB down, a 3 ms burst will result in an indicator that is 4 dB down. The shorter the burst, the more inaccurate the reading. (Note however, that this is significantly faster than the VU Meter.)

Again, unlike the VU meter, the decay time of a Type I PPM is not the reciprocal of the attack curve. This is defined by how quickly the indicator drops – in this case, the indicator will drop 20 dB in 1.4 to 2.0 seconds.

The attack time of a Type II PPM is identical to a Type I PPM.

The decay of a Type II PPM is somewhat different from its Type I cousin. The indicator falls back at a rate of 24 dB in 2.5 to 3.1 seconds. In addition, there is a “hold” function on the peak where the indicator is held for 75 ms to 150 ms before it starts to decay.

There’s one important thing to note in all of this discussion. This chapter assumes that we’re talking about professional equipment in a recording studio.

If you work with professional broadcast equipment, then the nominal level is different – in fact, it’s 4 dB higher than in a recording studio. 0 dB VU corresponds to +8 dBu and all of the other scales are higher to match.

If we’re talking about consumer–level equipment, either for recording or just for listening to things at home on your stereo, then the nominal 0 dB VU point (and all other nominal levels) corresponds to a level of -10 dBV or 0.316VRMS.

A digital meter is very similar to a PPM because, as we’ve already established, your biggest concern with digital audio is that the peak of the signal is never clipped. Therefore, we’re most interested in the peak or the amplitude of the signal.

As we’ve said before, the noise floor in a PCM digital audio signal is typically determined by the dither level which is usually at approximately one half of an LSB. The maximum digital level we can encode in a PCM digital signal is determined by the number of bits. If we’re assuming that we’re talking about a two’s complement system, then the maximum positive amplitude is a level that is expressed as a 0 followed by as many 1’s as are allowed in the digital word. For example, in an 8–bit system, the maximum possible positive level (in binary) is 01111111. Therefore, in a 16–bit system with 65536 possible quantization values, the maximum possible positive level is level number 32767. In a 24–bit system, the maximum positive level is 8388607. (If you’d like to do the calculation for this, it’s 2(n-1) -1 where n is the number of bits in the digital word.

Note that the negative–going signal has one extra LSB in a two’s complement system as is discussed in the chapter on digital conversion.

The maximum possible value in the positive direction in a PCM digital signal is called full scale because a sample that has that maximum value uses the entire scale that is possible to express with the digital word. (Note that we’ll see later that this definition is actually a lie – there are a couple of other things to discuss here, but we’ll get back to them in a minute.)

It is therefore evident that, in the digital world, there is some absolute maximum value that can be expressed, above which there is no way to describe the sample value. We therefore say that any sample that hits this maximum is “clipped” in the digital domain – however, this does not necessarily mean that we’ve clipped the audio signal itself. For example, it is highly unlikely that a single clipped sample in a digital audio signal will result in an audible distortion. In fact, it’s unlikely that two consecutively clipped samples will cause audible artifacts. The more consecutively clipped samples we have, the more audible the distortion. People tend to settle on 2 or 3 as a good number to use as a definition of a “clipped” signal.

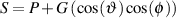

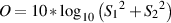

If we look at a rectified signal in a two’s complement PCM digital domain, then the amplitude of a sample can be expressed using its relationship to a sample at full scale. This level is called dB FS or “decibels relative to full scale” and can be calculated using the following equation:

dB FS = 20 * log (sample value / maximum possible value)

Therefore, in a 16–bit system, a sine wave that has an amplitude of 16384 (which is also the value of the sample at the positive peak of the sine wave) will have a level of –6.02 dB FS because:

20 * log (16384 / 32767) = –6.02 dB FS

There’s just one small catch: I lied. There’s one additional piece of information that I’ve omitted to keep things simple. Take a close look at Figure 10.22. The way I made this plot was to create a sine wave and quantize it using a 4–bit system assuming that the sampling rate is 20 times the frequency of the sine wave itself. Although this works, you’ll notice that there are some quantization levels that are not used. For example, not one of the samples in the digital sine wave representation has a value of 0001, 0011 or 0101. This is because the frequency of the sine wave is harmonically related to the sampling rate. In order to ensure that more quantization levels are used, we have to use a sampling rate that is enharmonically related to the sampling rate. The technical definition of “full scale” uses a digitally–generated sine tone that has a frequency of 997 Hz. Why 997 Hz? Well, if you divide any of the standard sampling rates (32 kHz, 44.1 kHz, 48 kHz, 88.2 kHz, 96 kHz, etc...) by 997, you get a nasty number. The result is that you get a different quantization value for every sample in a second. You won’t hit every quantization value because the whole system starts repeating after one second – but, if your sine tone is 997 Hz and your sampling rate is 44.1 kHz, you’ll wind up hitting 44100 different quantization values. The higher the sampling rate, the more quantization values you’ll hit, and the less your error from full scale.

The other reason for using this system is to avoid signals that are actually higher than Full Scale without the system actually knowing. If you have a sine tone with a frequency that is harmonically related to the sampling rate, then it’s possible that the very peak of the wave is between two samples, and that it will always be between two samples. Therefore the signal is actually greater than 0 dB FS without you ever knowing it. With a 997 Hz tone, eventually, the peak of the wave will occur as close as is reasonably possible to the maximum recordable level.

This becomes part of the definition of full scale – the amplitude of a signal is compared to the amplitude of a 997 Hz sine tone at full scale. That way we’re sure that we’re getting as close as we can to that top quantization level.

There is one other issue to deal with: the definition of dB FS uses the RMS value of the signal. Therefore, a signal that is at 0 dB FS has the same RMS value as a 997 Hz sine wave whose peak positive amplitude reaches full scale. There are two main implications of this definition. The first has to do with the crest factor of your signal. Remember that the crest factor is a measurement of the relationship between the peak and the RMS value of the signal. In almost all cases, the peak value will be greater than RMS value (in fact, the only time this is not the case is a square wave in which they will be equal). Therefore, if a meter is really showing you the signal strength in dB FS, then it is possible that you are clipping your signal without your meter knowing. This is because the meter would be showing you the RMS level, but the peak level is much higher. It is therefore possible that you are clipping that peak without hitting 0 dB FS. This is why digital equipment also has an OVER indicator (check out Figure 10.23) to tell you that the signal has clipped. Just remember that you don’t necessarily have to go all the way up to 0 dB FS to clip.

Another odd implication of the dB FS definition is that, in the odd case of a square wave, you can have a level that is greater than 0 dB FS without clipping. The crest factor of a sine wave is 3.01 dB. This means that the RMS level of the sine tone is 3.01 dB less than its peak value. By comparison, the crest factor of a square wave is 0 dB, meaning that the peak and RMS values are equal. So what? Well, since dB FS is referenced to the RMS value of a sine wave whose maximum peak is at Full Scale (and therefore 3.01 dB less than Full Scale), if you put in a square wave that goes all the way up to Full Scale, it will have a level that is 3.01 dB higher than the Full Scale sine tone, and therefore a level of +3.01 dB FS. This is an odd thing for people who work a lot with digital gear. I, personally, have never seen a digital meter that goes beyond 0 dB. Then again, I don’t record square waves very often either, so it doesn’t really matter a great deal.

Chances are that the digital meter on whatever piece of equipment that you own really isn’t telling you the signal strength in dB FS. It’s more likely that the level shown is a sample–by–sample level measurement (and therefore not an RMS measurement) with a ballistic that makes the meter look like it’s decaying slowly. Therefore, in such a system, 0 dB on the meter means that the sample is at Full Scale.

I’m in the process of making a series of test tones so that you can check your meters to see how they display various signal levels. Stay tuned!

As far as I’ve been able to tell, there are no standards for digital meter ballistics or appearances, so I’ll just describe a typical digital meter. Most of these use what is known as a dot bar mode which actually shows two levels simultaneously. Looking at Figure 10.23, we can see that the meter shows a bar that extends to –24 dB. This bar shows the present level of the signal using ballistics that typically have roughly the same visual characteristics as a VU Meter. Simultaneously, there is a dot at the –8 dB mark. This indicates that the most recent peak hit –8 dB. This dot will be erased after approximately one second or so and be replaced by a new peak unless the signal peaks at a value greater than –8 dB in which case that value will be displayed by the dot. This is similar to a Type II PPM ballistic with the decay being replaced with simple erasure.

Many digital audio meters also include a function that gives a very accurate measurement of the maximum peak that has been hit since we’ve started recording (or playing). This value is usually called the margin and is typically displayed as a numerical value near the meter, but elsewhere on the display.

Finally, digital meters have a warning symbol to indicate that the signal has clipped. This warning is simply called over since all we’re concerned with is that the signal went over full scale – we don’t care how far over full scale it went. The problem here is that different meters use different definitions for the word “over.” As I’ve already pointed out, some meters keep track of the number of consecutive samples at full scale and point out when that number hits 2 or 3 (this is either defined by the manufacturer or by the user, depending on the equipment and model number – check your manual). On some equipment (particularly older gear), the “digital” meter is driven by the analog conversion of the signal and is therefore extremely inaccurate – again, check your manual. An important thing to note about these meters is that they rarely are aware that the signal has gone over full scale when you’re playing back a digital signal, or if you’re using an external analog to digital to convertor – so be very careful.

From the time the sound arrives at the diaphragm of your microphone to the time the signal gets recorded, it has to travel a very perilous journey, usually through a lot of wire and components that degrade the quality of the signal every step of the way. One of the best ways to minimize this degradation is to ensure that you have an optimal gain structure throughout your recording chain, taking into account the noise and distortion characteristics of each component in the signal path. This sounds like a monumental task, but it really hinges on a couple of very simple concepts.

The first basic rule (that you’ll frequently have to break but you’d better have a good reason...) is that you should make the signal as loud as you can as soon as you can. For example, consider the example of a microphone connected through a mic preamp into a DAT machine. We know that, in order to get the best quality digital conversion of the signal, its maximum should be just under 0 dB FS. Let’s say that, for the particular microphone and program material, you’ll need 40 dB of gain to get the signal up to that level at the DAT machine. You could apply that gain at the mic preamp or the analog input of the DAT recorder. Which is better? If possible, it’s best to get all of the gain at the mic preamp. Why? Consider that each piece of equipment adds noise to the signal. Therefore, if we add the gain after the mic preamp, then we’re applying that gain to the signal and the noise of the microphone preamp. If we add the gain at the input stage of the mic preamp, then its inherent noise is not amplified. For example, consider the following equations:

| (signal + noise) * gain | (signal * gain) + noise | |

| (signal * gain) + (noise * gain) | But: | |

| High signal level, high noise level | High signal level, low noise level | |

NOT WRITTEN YET

Before we talk about the issue of how to setup a playback system, we have to discuss the issue of standard operating levels. We have already seen that our ears have different sensitivities to different frequencies at different levels. Basically, at low listening levels, we can’t hear low-end material as easily as mid-band content. The louder the signal gets, the “flatter” the frequency response of our ears. In the practical world, this means that if I do a mix at a low level, then I’ll mix the bass a little hot because I can’t hear it. If I turn it up, it will sound like there’s more bass in the mix, because my ears have a different response.

Therefore, in order to ensure that you (the listener) hear what I (the mixing engineer) hear, one of the first things I have to specify is how loud you should turn it up. This is the reason for a standard operating level. That way, if you say “there’s not enough bass” I can say “you’re listening at too low a level” – unless you aren’t, then we have to talk about issues of taste. This subject will not be addressed in this book.

The film and television industries have an advantage that the music people don’t. They have international standards for operating levels. What this means is that a standard operating level on the recording medium and in the equipment will result in a standard acoustic level at the listening position in the mixing studio or theatre. Of course, we typically listen to the television at lower levels than we hear at the movie theatre, so these two levels are different.

Tables 10.3 shows the standard operating levels for film and television sound work. It also includes an approximate range for music mixing, although this is not a standard level.

| Medium | Signal level | Signal level | Signal level | Acoustic level |

| Film | -20 dB FS | 0 dB VU | +4 dBu | 85 dBspl |

| Television | -20 dB FS | 0 dB VU | +4 dBu | 79 dBspl |

| Music | -20 dB FS | 0 dB VU | +4 dBu | 79 - 82 dBspl |

It is important to note that the values in Table 10.3 are for a single loudspeaker measured with an SPL meter with a C-weighting and a slow response. So, for example, if you’re working in 5.1 surround for film, pink noise at a level of 0 dB VU sent to the centre channel only will result in a level of 85 dBspl at the mixing position. The same should be true of any other main channel.

Dolby has a slightly different recommendation in that, for film work, they suggest that each surround channel (which may be sent to multiple loudspeakers) should produce a standard level of 82 dBspl. This difference is applicable only to “film-style mixing rooms” [Dolby, 2000].

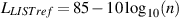

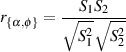

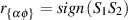

Furthermore, the EBU has a slightly different recommendation. In Technical Document 3276 [?][?], they specify that the “Alignment Signal Level” should be a pink noise signal with an RMS value of -18 dBFS on each channel. That signal should produce an acoustic level that is determined by the number of channels you have using Equation 10.1.

| (10.1) |

Where LLISTref is the reference listening level in dB(A) (using an RMS, slow) at the listening position for each channel, each produced by a single loudspeaker, and where n is the number of channels. Therefore, for 2 channels, the level for each channel should be 82 dB(A). For a 5-channel configuration, the level for each channel should be 78 dB(A).

Before we go any further, we have to look at a commonly-confused issue in monitoring, particularly since the popularization of so-called 5.1 systems.

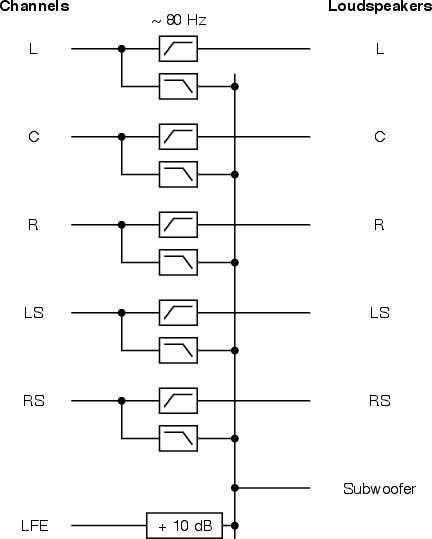

In a 5.1-channel mix, we have 5 main full-range channels, Left, Centre, Right, Left Surround and Right Surround. In addition, there is a channel which is band-limited from 0 to 120 Hz called the LFE or Low Frequency Effects channel.

In the listening room in the real world, we have a number of loudspeakers:

Of course, the situation I just described for the listening environment is not the optimal situation, but it’s a reasonable description of the real world. We’ll look at the ideal situation below.

If we look at a very simple configuration, then the L, R, C, LS and RS signals are connected directly to the L, R, C, LS and RS loudspeakers respectively, and the LFE channel is connected to the subwoofer. In most cases, however, this is not the only configuration. In larger listening rooms, we typically see more than two surround loudspeakers and more than one subwoofer. In smaller systems, people have been told that they don’t need 5 large speakers, because all the bass can be produced by the subwoofer using a bass management system described below, consequently, the subwoofer produces more than just the LFE channel.

So, it is important to remember that delivery channels are not directly equivalent to loudspeakers. It is an LFE channel – not a subwoofer channel.

Once upon a time, people who bought a stereo system bought two identical loudspeakers to make the sound they listened to. If they couldn’t spend a lot of money, or they didn’t have much space, they bought smaller loudspeakers which meant less bass. (This isn’t necessarily a direct relationship, but that issue is dealt with in the section on loudspeaker design... We’ll assume that it’s the truth for this section.)

Then, one day I walked into my local stereo store and heard a demo of a new speaker system that just arrived. The two loudspeakers were tiny little things - two cubes about the size of a baseball stuck together on a stand for each side. The sound was much bigger than these little speakers could produce... there had to be a trick. It turns out that there was a trick. The Left and Right channels from the CD were being fed to a crossover system where all the low-frequency information was separated from the high-frequency information, summed and sent to a single low-frequency driver sitting behind the couch I was sitting on. The speakers I could see were just playing the mid- and high-frequency information... all the low-end came from under the couch.

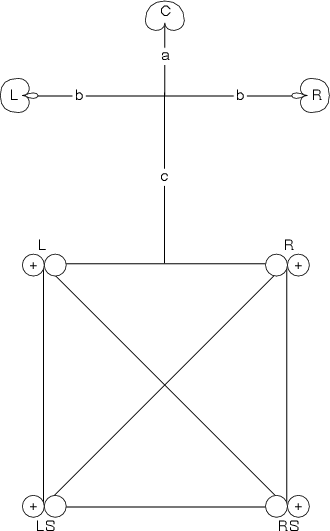

This is the concept behind bass management or bass redirection. If you have a powerful-enough dedicated low frequency loudspeaker, then your main speakers don’t need to produce that low frequency information. There are lots of arguments for and against this concept, and I’ll try to address a couple of these later, but for now, let’s look at the layout of a typical bass management system.

Figure 10.26 shows a block diagram for a typical bass management scheme. The five main channels are each filtered through a high-pass filter with a crossover frequency of approximately 80 Hz before being routed to their appropriate loudspeakers. These five channels are also individually filtered through low-pass filters with the same crossover frequency, and the outputs of the these filters is routed to a summing buss. In addition, the LFE channel input is increased in level by 10 dB before being added to the same buss. The result on this summing buss is sent to the subwoofer amplifier.

There is an important item to notice here – the 10 dB gain on the LFE channel. Why is this here? Well, consider if we send a full-scale signal to all channels. The single subwoofer is being asked to balance with 5 other almost-full-range loudspeakers, but since it is only one speaker competing with 5 others, we have to boost it to compensate. We don’t need to do this to the outputs resulting from the bass management system because the five channels of low-frequency information are added, and therefore boost themselves in the process. The reason this is important will be obvious in the discussion of loudspeaker level calibration below.

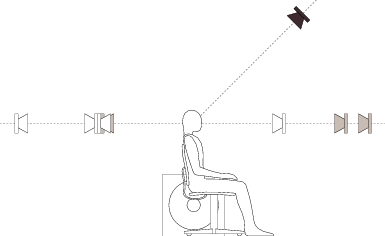

There are some basic rules to follow in the placement of loudspeakers in the listening space. The first and possibly most important rule of thumb is to remember that all loudspeakers should be placed at ear-level and aimed at the listening position. This is particularly applicable to the tweeters in the loudspeaker enclosure. Both of these simple rules are due to the fact that loudspeakers beam – that is to say that they are directional at high frequencies. In addition, you want your reproduced sound stage to be on your horizon, therefore the loudspeakers should be at your height. If it is required to place the loudspeakers higher (or lower) than the horizontal plane occupied by your ears, they should be angled downwards (or upwards) to point at your head.

The next issue is one of loudspeaker proximity to boundaries. As we discussed placing a loudspeaker immediately next to a boundary such as a wall will result in a boost of the low frequency components in the device. In addition a loudspeaker placed against a wall will couple much better to room modes in the corresponding dimension, resulting in larger resonant peaks in the room response. As a result, it is typically considered good practice to place loudspeakers on stands at least 1 m from any rigid surface. Of course, there are many situations where this is simply not possible. In these cases, correction of the loudspeaker’s response should be considered, either through post-crossover gain manipulation as is possible in many active monitors, or using equalization.

There are a couple of other issues to consider in this regard, some of which are covered below in Section 10.2.4.

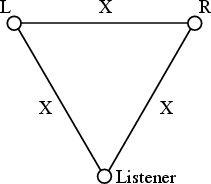

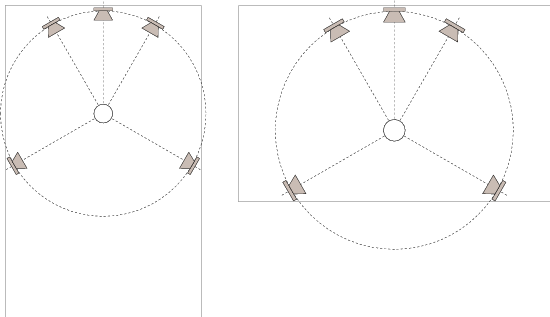

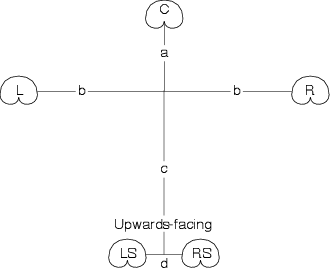

A two-channel playback system (typically misnamed “stereo”) has a standard configuration. Both loudspeakers should be equidistant from the listener and at angles of -30∘ and 30∘ where 0∘ is directly forward of the listener. This means that the listener and the two loudspeakers form the points of an equilateral triangle as shown in Figure 10.27, producing a loudspeaker aperture of 60∘.

Note that, for all discussions in this book, all positive angles are assumed to be on the right of centre forward, and all negative angles are assumed to be left of centre forward.

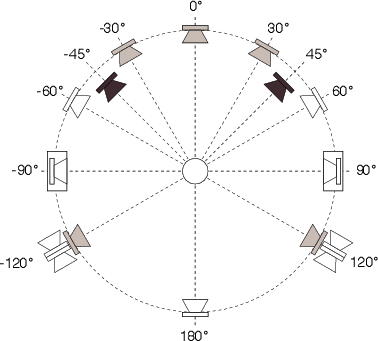

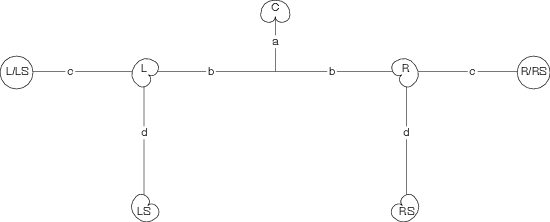

In the case of 5.1 surround sound playback, we are actually assuming that we have a system comprised of 5 full-range loudspeakers and no subwoofer. This is the recommended configuration for music recording and playback[Dolby, 2000] whereas a true 5.1 configuration is intended only for film and television sound. Again, all loudspeakers are assumed to be equidistant from the listener and at angles of 0∘, ▒30∘ and with two surround loudspeakers symmetrically placed at an angle between ▒100∘ and ▒120∘. This configuration is detailed in ITU-R BS.775.1[ITU, 1994] (usually called “ITU775” or just “775” in geeky conversation... say all the numbers... “seven seven five” if you want to be immediately accepted by the in-crowd) and shown in Figure 10.28. If you have 25 Swiss Francs burning a hole in your pocket, you can order this document as a pdf or hardcopy from www.itu.ch. Note that the configuration has 3 different loudspeaker apertures, 30∘ (with the C/L and C/R pairs), approximately 80∘ (L/LS and R/RS) and approximately 140∘ (LS/RS).

How to set up a 5-channel system using only a tape measure

It’s not that easy to set up a 5-channel system using only angles unless you have a protractor the size of your room. Luckily, we have trigonometry on our side, which means that we can actually do the set up without ever measuring a single angle in the room. Just follow the step-by-step instructions below.

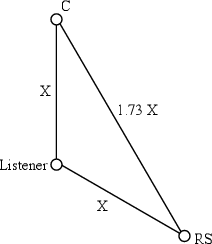

Step 1. Mark the listener’s location in the room and determine the desired distance to the loudspeakers (we’ll call that distance X ) Try to keep your loudspeakers at least 2 m from the listening position and no less than 1 m from any wall.

Step 2. Make an equalateral triangle marking the listener’s location, the Left and the Right loudspeakers as shown in the figure on the right. See Figure 10.29.

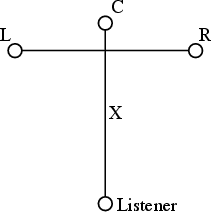

Step 3. Find the halfway point between the L and R loudspeakers and mark it. See Figure 10.30.

Step 4. Find the location of the C speaker using the halfway mark you just made, the listener’s location and the distance X. See Figure 10.31.

Step 5. Marks the locations for the LS and RS loudspeakers using the triangle measurements shown on the right. See Figure 10.32.

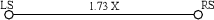

Step 6. Double check your setup by measuring the distance between the LS and RS loudspeakers. It should be 1.73X. (Therefore the C, LS and RS loudspeakers should make an equilateral triangle.) See Figure 10.33.

7. If the room is small, put the sub in the corner of the room. If the room is big, put the sub under the centre loudspeaker. Alternately, you could just put the sub where you think that it sounds best.

Room Orientation

There is a minor debate between opinions regarding the placement of the monitor configuration within the listening room. Usually, unless you’ve spent lots of money getting a listening room or control room designed from scratch, you’re probably going to be in a room that is essentially rectangular. This then raises two important questions:

Most people don’t think twice about the answer to the first question – of course you use the room symmetrically. The argument for this logic is to ensure a number of factors:

Therefore, your left / right pairs of speakers will “sound the same” (this also means the left surround / right surround pair) and your imaging will not pull to one side due to asymmetrical reflections.

Then again, the result of using a room symmetrically is that you are sitting in the dead centre of the room which means that you are in one of the worst possible locations for hearing room modes – the nulls are at a minimum and the antinodes are at a maximum at the centre of the room. In addition, if you listen for the fundamental axial mode in the width of the room, you’ll notice that your two ears are in opposite polarities at this frequency. Moving about 15 to 20 cm to one side will alleviate this problem which, once heard once, unfortunately, cannot be ignored.

So, it is up to your logic and preference to decide on whether to use the room symmetrically.

The second question of width vs. depth depends on your requirements. Figure 10.34 shows that the choice of room orientation has implications on the maximum distance to the loudspeakers. Both floorplans in the diagram show rooms of identical size with a maximum loudspeaker distance for an ITU775 configuration laid on the diagram. As can be seen, using the room as a wide, but shallow space allows for a much larger radius for the loudspeaker placement. Of course, this is a worst-case scenario where the loudspeakers are placed against boundaries in the room, a practice which is not advisable due to low-frequency boost and improved coupling to room modes.

From the very beginning, it was recognized that the 5.1 standard was a compromise. In a perfect system you would have an infinite number of loudspeakers, but this causes all sorts of budgetary and real estate issues... So we all decided to agree that 5 channels wasn’t perfect, but it was pretty good. There are people with a little more money and loftier ideals than the rest of us who are pushing for a system based on the MIBEIYDIS system (more-is-better-especially-if-you-do-it-smarter).

One of the most popular of these systems uses the standard 5.1 system as a starting point and expands on it. Dubbed 10.2 and developed by Tomlinson Holman (the TH in THX) this is actually a 12.2 system that uses a total of 16 loudspeakers.

There are a couple of things to discuss about this configuration. Other than the sheer number of loudspeakers, the first big difference between this configuration and the standard ITU775 standard is the use of elevated loudspeakers. This gives the mixing engineer two possible options. If used as a stereo pair, it becomes possible to generate phantom images higher than the usual plane of presentation, giving the impression of height. If diffuse sound is sent to these loudspeakers, then the mix relies on our impaired ability to precisely localize elevated sound sources and therefore can give a better sense of envelopment than is possible with a similar number of loudspeakers distributed in the horizontal plane.

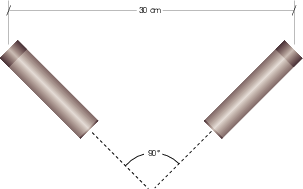

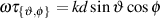

You will also notice that there are pairs of back-to-back loudspeakers placed at the ▒90∘ positions. These are what are called diffuse radiators (although, technically speaking, they aren’t diffuse radiators...) and are actually wired to create a dipole radiator. In essence, you simply send the same signal to both loudspeakers in the pair, inverting the polarity of one of the two. This produces the dipole effect and, in theory, cancels all direct sound arriving at the listener’s location. Therefore, the listener receives only the reflected sound from the front and rear walls predominantly, creating the impression of a more diffuse sound than is typically available from the direct sound from a single loudspeaker.

Finally, you will note from the designation “10.2” that this system calls for two subwoofers. This follows the recommendations of a number of people [Martens, 1999][?] who have done research proving that uncorrelated signals from two subwoofers can result in increased envelopment at the listening position. The position of these subwoofers should be symmetrical, however more details will be discussed below.

See Section 10.5.2.

NOT WRITTEN YET

NOTES

Better to have many full-range speakers than 1 subwoofer

Floyd Toole’s idea of room mode cancellation through multiple correlated subwoofers

David Greisinger’s 2 decorrelated subwoofers driven by 1 channel

Bill Marten’s 2 subwoofer channels.

The calibration of your monitoring system is possibly one of the most significant factors that will determine the quality of your mixes. As a simple example, if you have frequency-independent level differences between your two-channel monitors, then your centre position is different from the rest of the world’s. You will compensate for your problem, and consequently create a problem for everyone else resulting in complaints that your lead vocals aren’t centered.

Unfortunately, it is impossible to create the perfect monitor, so you have to realize the limitations of your system and learn to work within those constraints. Essentially, the better you know the behaviour of your monitoring system, the more you can trust it, and therefore the more you can be trusted by the rest of us.

There is a document available from the ITU that outlines a recommended procedure for doing listening tests on small-scale impairments in audio systems [itu, 1997]. Essentially, this is a description of how to do the listening test itself, and how to interpret the results. However, there is a section in there that describes the minimum requirements for the reproduction system. These requirements can easily be seen as a minimum requirement for a reference monitoring system, and so I’ll list them here to give you an idea of what you should have in front of you at a recording or mixing session. Note that these are not standards for recording studios, I’m just suggesting that their a good set of recommendations that can give you an idea of a “good” playback system.

Note that all of the specifications listed here are measured in a free field (an anechoic chamber), 1 m from the acoustic centre of the loudspeaker.

Frequency Response

The on-axis frequency response of the loudspeaker should be measured in one-third octave bands using pink noise as a source signal. The response should not be outside the range of ▒2 dB within the frequency range of 40 Hz to 16 kHz. The frequency response measured at 10∘ off-axis should not differ from the on-axis response by more than 3 dB. The frequency response measured at 30∘ off-axis should not differ from the on-axis response by more than 4 dB [itu, 1997].

All main loudspeakers should be matched in on-axis frequency response within 1 dB in the frequency range of 250 Hz to 2 kHz [itu, 1997].

Directivity Index

In the frequency range of 500 Hz to 10 kHz, the directivity index, C, of the loudspeakers should be within the limit 6 dB ≤ C ≤ 12 dB and “should increase smoothly with frequency” [itu, 1997].

Non-linear Distortion

Put one loudspeaker in an anechoic chamber and put your microphone 1 m in front of it, on axis. Send a sinusoidal tone between 40 Hz and 250 Hz to a loudspeaker that measures 90 dBspl at the microphone. If there is any distortion, then harmonics will be produced. None of those individual harmonics may be greater than 60 dBspl at the listening position (i.e. -30 dB relative to the 90 dBspl of the fundamental)[itu, 1997]. This also, means that you can have all harmonics present, as long as they are individually lower than 60 dBspl.

If the fundamental frequency of the input sinusoid is between 250 Hz and 16 kHz, then the same is true, but the threshold is 50 dBspl instead [itu, 1997].

Transient Fidelity

If you send a sine wave at any frequency to your loudspeaker and then stop the sine, it should not take more than 5 time periods of the frequency for the output to decay to 1/e (approximately 0.37 or -8.69 dB) of the original level [itu, 1997].

Time Delay

The delay difference between any two loudspeakers should not exceed 100 μs [itu, 1997]. (Note that this does not include propagation delay differences at the listening position.)

Dynamic Range

You should be able to play a continuous signal with a level of at least 108 dBspl for 10 minutes without damaging the loudspeaker and without overloading protection circuits [itu, 1997].

The equivalent acoustic noise produced by the loudspeaker should not exceed 10 dBspl, A-weighted [itu, 1997] WHAT IS EQUIVALENT ACOUSTIC NOISE?.

So, you’ve bought a pair of loudspeakers following the recommendations of all the people you know (but you bought the ones you like anyway...) you bring them to the studio and carefully position them following all the right rules. Now you have to make sure that the outputs levels of the two loudspeakers is matched. How do you do this?

You actually have a number of different options here, but we’ll just look at a couple, based on the assumption that you don’t have access to really serious (and therefore REALLY expensive) measurement equipment.

SPL Meter Method

One of the simplest methods of loudspeaker calibration is to use pink noise as your signal and an SPL meter as your measurement device. If an SPL meter is not available (a cheap one is only about $50 at Radio Shack... go treat yourself...) then you could even get away with an omnidirectional condenser microphone (the smaller the diaphragm, the better) and the meter bridge of your mixing console.

Send the pink noise signal to the amplifier (or the crossover input if you’re using active crossovers) for one of your loudspeakers. The level of the signal should be 0 dB VU (or +4 dBu).

Place the SPL meter at the listening position pointing straight up. If you are holding the meter, hold it as far away from your body as you can and stand to the side so that the direct sound from the loudspeaker to the meter reflects off your body as little as possible (yes, this will make a difference). The SPL meter should be set to C-weighting and a slow response.

Adjust your amplifier gain so that you get 85 dBspl on the meter. (Feel free to use a different value if you think that you have a really good excuse. The 85 dBspl reference value is the one used by the film industry. Television people use 79 dBspl and music people can’t agree on what value to use.)

Repeat this procedure with the other loudspeaker.

Remember that you are measuring one loudspeaker at a time – you should 85 dBspl from each loudspeaker, not both of them combined.

A word of warning: It’s possible that your listening position happens to be in a particular location where you get a big resonance due to a room mode. In fact, if you have a smaller room and you’ve set up your room symmetrically, this is almost guaranteed. We’ll deal with how to cope with this later, but you have to worry about it now. Remember that the SPL meter isn’t very smart – if there’s a big resonance at one frequency, that’s basically what you’re measuring, not the full-band average. If your two loudspeakers happen to couple differently to the room mode at that frequency, then you’re going to have your speakers matched at only one frequency and possibly no others. This is not so good.

There are a couple of ways to avoid this problem. You could change the laws of physics and have room modes eliminated in your room, but this isn’t practical. You could move the meter around the listening position to see if you get any weird fluctuations because many room modes produce very localized problems. However, this may not tell you anything because if the mode is a lower frequency, then the wavelength is very long and the whole area will be problematic. Your best bet is to use a measurement device that shows you the frequency response of the system at the listening position, the simplest of which is a real-time analyzer. Using this system, you’ll be able to see if you have serious problems in localized frequency bands.

Real-Time Analyzer Method

If you’ve got a real-time analyzer (or RTA) lying around, you could be a little more precise and get a little more information about what’s happening in your listening room at the listening position. Put an omnidirectional microphone with a small diaphragm at the listening position and aim it at the ceiling. The output should go to the RTA.

Using pink noise at a level of +4 dBu sent to a single loudspeaker, you should see a level of 70 dBspl in each individual band on the RTA [Owinski, 1998]. Whether or not you want to put an equalizer in the system to make this happen is your own business (this topic is discussed a little later on), but you should come as close as you can to this ideal with the gain at the front of the amplifier.

Other methods

There are a lot of different measurement tools out there for doing exactly this kind of work, however, they’re not cheap, and if they are, they may not be very reliable (although there really isn’t a direct correlation between price and system reliability...) My personal favourites for electroacoustic measurements are a MLSSA system from DRA Laboratories, and a number of solutions from BrŘel & KjŠr, but there’s lots of others out there.

Just be warned, if you spend a lot of money on a fancy measurement system, you should probably be prepared to spend a lot of time learning how to use it properly. My experience is that the more stuff you can measure, the more quickly and easily you can find the wrong answers and arrive at incorrect conclusions.

The method for calibrating a 5-channel system is no different than the procedure described above for a two-channel system, you just repeat the process three more times for your Centre, Left Surround and Right Surround channels. (Notice that I used the word “channels” there instead of “loudspeakers” because some studios have more than two surround loudspeakers. For example, if you do have more than one Left Surround loudspeaker, then your Left Surround loudspeakers should all be matched in level, and the total output from all of them combined should be equal to the reference value.)

The only problem that now arises is the question of how to calibrate the level of the subwoofer, but we’ll deal with that below.

The same procedure holds true for calibration of a 10.2 system. All channels should give you the same SPL level (either wide band with an SPL meter or narrow band with an RTA) at the listening position. The only exception here is the diffuse radiators at ▒90∘. You’ll probably notice that you won’t get as much low frequency energy from these loudspeakers at the listening position due to the cancellation of the dipole. The easiest way to get around this problem is to band-limit your pink noise source to a higher frequency (say, 250 Hz or so...) and measure one of your other loudspeakers that you’ve already calibrated (Centre is always a good reference). You’ll notice that you get a lower number because there’s less low-end – write that number down and match the dipoles to that level using the same band-limited signal.

Again, the same procedure holds for an Ambisonics configuration.

Here’s where things get a little ugly. If you talk to someone about how they’ve calibrated their subwoofer level, you’ll get one of five responses:

Oddly enough, it’s possible that the first three of these responses actually mean exactly the same thing. This is partly due to an issue that I pointed out earlier in Section 10.2.3. Remember that there’s a 10 dB gain applied to the LFE input of a multichannel monitoring system for the remainder of this discussion.

The objective with a subwoofer is to get a low-frequency extension of your system without exaggerating the low-frequency components. Consequently, if you send a pink-noise signal to a subwoofer and look at its output level in a one-third octave band somewhere in the middle of its response, it should have the same level as a one-third octave band in the middle of the response of one of your other loudspeakers. Right? Well.... maybe not.

Let’s start by looking at a bass-managed signal with no signal sent to the LFE input. If you send a high-frequency signal to the centre channel and sweep the frequency down (without changing the signal level) you should see not change in sound pressure level at the listening position. This is true even after the frequency has gotten so low that it’s being produced by the subwoofer. If you look at Figure 10.26 you’ll see that this really is just a matter of setting the gain of the subwoofer amplifier so that it will give you the same output as one of your main channels.

What if you are only using the LFE channel and not using bass management? In this case, you must remember that you only have one subwoofer to compete with 5 other speakers, so the signal has been boosted by 10 dB in the monitoring box. This means that if you send a pink noise to the subwoofer and monitor it in a narrow band in the middle of its range, it should be 10 dB louder than a similar measurement done with one of your main channels. This extra 10 dB is produced by the gain in the monitoring system.

Since the easiest way to send a signal to the subwoofer in your system is to use the LFE input of your monitoring box, you have to allow for this 10 dB boost in your measurements.

Again, you can do your measurements with any appropriate system, but we’ll just look at the cases of an SPL meter and an RTA.

SPL Meter Method

We will assume here that you have calibrated all of your main channels to a reference level of 85 dBspl using + 4 dBu pink noise.

Send pink noise at +4 dBu, band-limited from 20 to 80 Hz, to your subwoofer through the LFE input of your monitor box. Since the pink noise has been band-limited, we expect to get less output from the subwoofer than we would get from the main channels. In fact, we expect it to be about 6 dB less. However, the monitoring system adds 10 dB to the signal, so we should wind up getting a total of 89 dBspl at the listening position, using a C-weighted SPL meter set to a slow response.

Note that some CD’s with test signals for calibrating loudspeakers take the 10 dB gain into account and therefore reduce the level of the LFE signal by 10 dB to compensate. If you’re using such a disc instead of producing your own noise, then be sure to find out the signal’s level to ensure that you’re not calibrating to an unknown level...

If you choose to send your band-limited pink noise signal through your bass management circuitry instead of through the LFE input, then you’ll have to remember that you do not have the 10 dB boost applied to the signal. This means that you are expecting a level of 79 dBspl at the listening position.

The same warning about SPL meters as was described for the main loudspeakers holds true here, but more so. Don’t forget that room modes are going to wreak havoc with your measurements here, so be warned. If all you have is an SPL meter, there’s not really much you can do to avoid these problems... just be aware that you might be measuring something you don’t want.

Real-Time Analyzer Method

If you’re using an RTA instead of an SPL meter, your goal is slightly easier to understand. As was mentioned above, the goal is to have a system where the subwoofer signal routed through the LFE input is 10 dB louder in a narrow band than any of the main channels. So, in this case, if you’ve aligned your main loudspeakers to have a level of 70 dBspl in each band of the RTA, then the subwoofer should give you 80 dBspl in each band of the RTA. Again, the signal is still pink noise with a level of +4 dBu and band-limited from 20 Hz to 80 Hz.

Summary

| Source | Signal level | RTA | SPL Meter | |

| (per band) | ||||

| Main channel | -20 dB FS | 70 dBspl | 85 dBspl | |

| Subwoofer (LFE input) | -20 dB FS | 80 dBspl | 89 dBspl | |

| Subwoofer (main channel input) | -20 dB FS | 70 dBspl | 79 dBspl | |

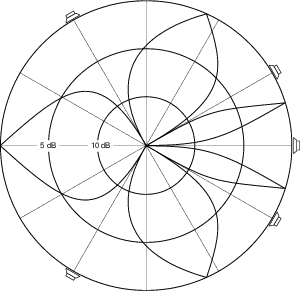

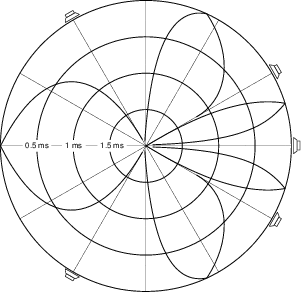

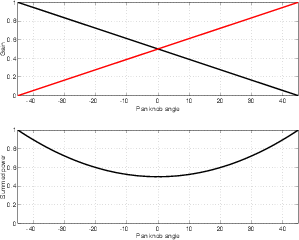

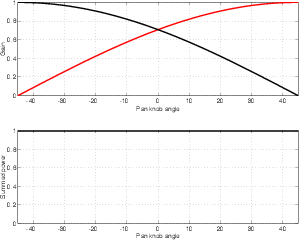

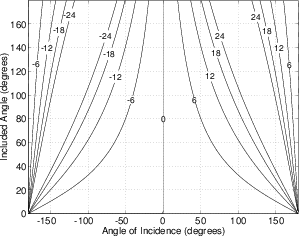

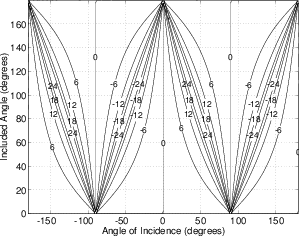

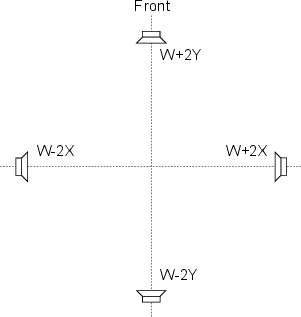

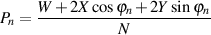

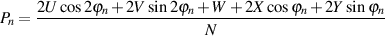

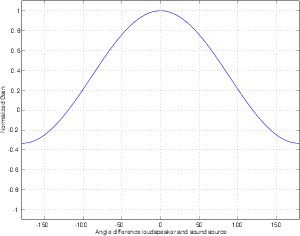

Before we get into the issue of the characteristics of various microphone configurations, we have to look at the general idea of panning in two-channel and five-channel systems. Typically, panning is done using a technique called constant power pair-wise panning which is a system whose name contains a number of different issues which are discussed in this and the next chapter.

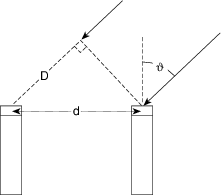

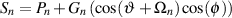

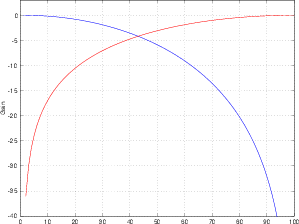

As you walk around the world, you are able to localize sound sources with a reasonable degree of accuracy. This basically means that if your eyes are closed and something out there makes a sound, you can point at it. If you try this exercise, you’ll also find that your accuracy is highly dependent on the location of the source. We are much better at detecting the horizontal angle of a sound source than its vertical angle. We are also much better at discriminating angles of sound sources in the front than at the sides of our head. This is because we are mainly relying on two basic attributes of the sound reaching our ears. These are called the interaural time of arrival difference (ITD’s) and the interaural amplitude difference (IAD’s).

When a sound source is located directly in front of you, the sound arrives at your two ears at the same time and at the same level. If the source moves to the right, then the sound arrives at your right ear earlier (ITD) and louder (IAD) than it does in your left ear. This is due to the fact that your left ear is farther away from the sound source and that your head gets in the way and shadows the sound on your left side.

Panning techniques rely on these same two differences to produce the simulation of sources located between the loudspeakers at predictable locations. If we send a signal to just one loudspeaker in a two-channel system, then the signal will appear to come from the loudspeaker. If the signal is produced by both loudspeakers at the same level and the same time, then the apparent location of the sound source is at a point directly in front of the listener, halfway between the two loudspeakers. Since there is no loudspeaker at that location, we call the effect a phantom image.

The exact location of a phantom image is determined by the relationship of the sound produced by the two loudspeakers. In order to move the image to the left of centre, we can either make the left channel louder, earlier, or simultaneously louder and earlier than the right channel. This system uses essentially the same characteristics as our natural localization system, however, now, we are talking about interchannel time differences and interchannel amplitude differences.

Almost every pan knob on almost every mixing console in the world is used to control the interchannel amplitude difference between the output channels of the mixer. In essence, when you turn the pan knob to the left, you make the left channel louder and the right channel quieter, and therefore the phantom image appears to move to the left. There are some digital consoles now being made which also change the interchannel time differences in their panning algorithms, however, these are still very rare.

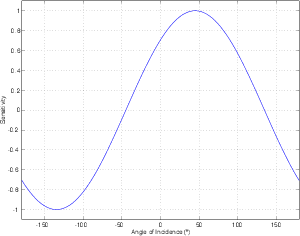

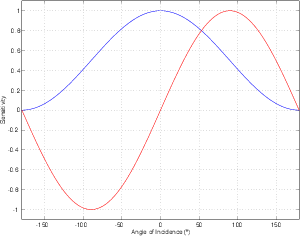

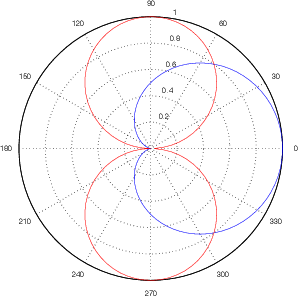

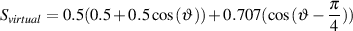

This panning of phantom images can be accomplished not only with a simple pan knob controlling the electrical levels of the two or more channels, we can also rely on the sensitivity pattern of directional microphones to produce the same level differences.

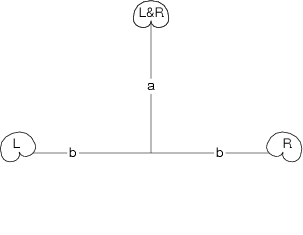

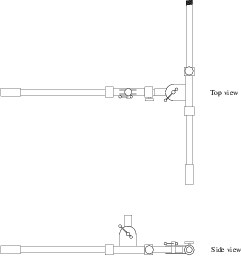

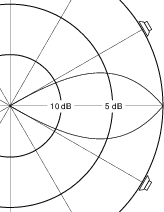

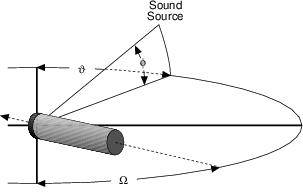

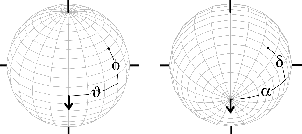

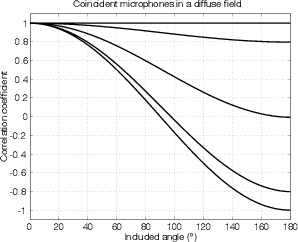

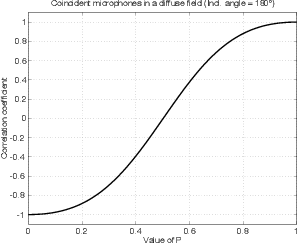

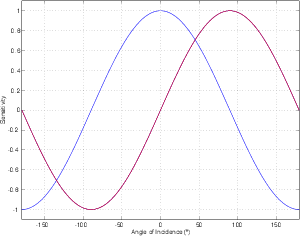

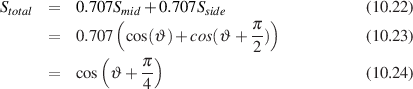

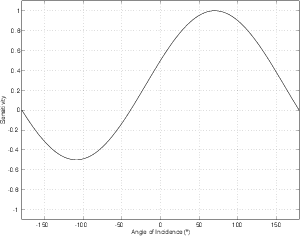

For example, let’s take two cardioid microphones and place them so that the two diaphragms are vertically aligned - one directly over the other. This vertical alignment means that sounds reaching the microphones from the horizontal plane will arrive at the two microphones simultaneously – therefore there will be no time of arrival differences in the two channels. consequently we call them coincident. Now let’s arrange the microphones such that one is pointing 45∘ to the left and the other 45∘ to the right, remembering that cardioids are most sensitive to a sound source directly in front of them.

If a sound source is located at 0∘, directly in front of the pair of microphones, then each microphone is pointing 45∘ away from the sound source. This means that each microphone is equally insensitive to the sound arriving at the mic pair, so each mic will have the same output. If each mic’s output is sent to a single loudspeaker in a stereo configuration then the two loudspeakers will have the same output and the phantom image will appear dead centre between the loudspeakers.

However, let’s think about what happens when the sound source is not at 0∘. If the sound source moves to the left, then the microphone pointing left will have a higher output because it is more sensitive to signals in directly in front of it. On the other hand, we’re moving further away from the front of the right-facing microphone so its output will become quieter. The result in the stereo image is that the left loudspeaker gets louder while the right loudspeaker gets quieter and the image moves to the left.

If we had moved the sound source towards the right, then the phantom image would have moved towards the right.

This system of two coincident 90∘ cardioid microphones is very commonly used, particularly in situations where it it important to maintain what is called mono compatibility. Since the signals arriving at the two microphones are coincident, there are no phase differences between the two channels. As a result, there will be no comb filtering effects if the two channels are summed to a single output as would happen if your recording is broadcast over the radio. Note that, if a pair of 90∘ coincident cardioids is summed, then the total result is a single, virtual microphone with a sort of weird subcardioid-looking polar plot, but we’ll discuss that later.

Another advantage of using this configuration is that, since the phantom image locations are determined only by interchannel amplitude differences, the image locations are reasonably stable and precise.

There are, however, some disadvantages to using this system. To begin with, all of your sound sources located at the centre of the stereo sound stage are located off-axis to the microphones. As a result, if you are using microphones whose off-axis response is less than desirable, then you may experience some odd colouration problems on your more important sources.